3.2. How the operating system manages memory

Now that we know the different types of memory available to your computer, it is time to look at how we as programmers can work with memory. In this section, we will learn about the role that the operating system plays in managing the hardware memory resources, and the routines that it provides to other programs for accessing this memory.

Physical and virtual addressing

When we talk about memory in a computer, what we mean is a collection of identical memory cells that can store data in the form of bits. It has been shown to be convenient to talk not about individual bits, but group them together into one or more bytes at a time. If we then think of our memory as a collection of cells that can hold one byte worth of data each, we need a way to uniquely identify each of these memory cells. We do this by assigning each memory cell a number called its memory address. We then refer to the process of mapping between a memory address and its corresponding memory cell as addressing.

The simplest form of addressing is called physical addressing: Here, we just use the number of each memory cell as the address for our instructions. A corresponding instruction for loading the value of memory cell 3 into a register might look like this:

mov eax, BYTE PTR 3 ; move the byte at address 3 into the eax register

While this is the simplest and certainly the most conventient way of addressing memory, it is not the only way. In fact, physical addressing is seldom used in general purpose computers, for a variety of reasons:

- We can never address more memory than is physically present on the target machine

- If we have multiple processes, it becomes hard to assign memory regions to these processes. We would like separate processes to have separate address ranges, so that they don't interfer with each other. We also would like to be able to grow and shrink the amount of memory that each process can access dynamically

- How do we make sure that programs written by the user don't accidentally (or maliciously) overwrite memory that the operating system is using?

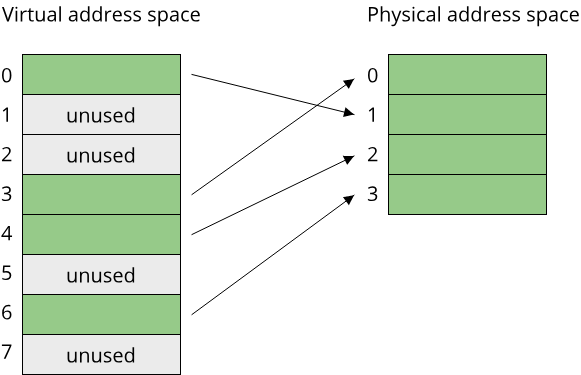

All these problems can be solved by introducing a layer of abstraction between the physical memory cells and the memory addresses. Instead of using physical addressing, we can use virtual addressing. With virtual addressing, we introduce a mechanism that maps a memory address from a virtual address space to a memory address from the physical address space. An address space in this context is a contiguous range of numbers from 0 to some maximum number N. If we have k bytes of physical memory, then the physical address space of this system is the range of numbers [0;k). We can then use a virtual address space of an arbitrary size, often one that is much larger than the physical address space, to generate virtual addresses from. Let's say we use a virtual address space of size 2k, so virtual addresses are in the range [0;2k). We now need a mechanism that maps from a virtual address to a physical address. Since we might have more virtual addresses than physical addresses, we need a way to perform this mapping without running out of physical space. A good way to achieve this is to a secondary storage medium as a cache for our memory cells. In practice, disk space is used, because most systems have more disk space available than working memory. Since the idea of a cache is to give faster access to data, in this concept the working memory actually serves as the cache for an address space stored on disk, and not vice versa. This might appear weird at first: With this concept, we are refering to memory addresses on disk instead of those in working memory, and we only hope that the data is in working memory as a cache. However note that in principle, there would be no need for a computer to have working memory at all. The only reason it has is that working memory is faster than disk memory, but from a purely functional point of view (remember the Turing machine?) any type of memory cells would be sufficient.

Virtual memory

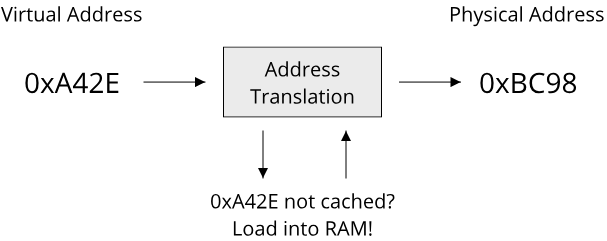

The concept which is described here is called virtual memory and is one of the cornerstones of modern operating systems. It works like this: Suppose your program wants to store a variable that is 8 bytes large. It can then talk to the operating system and request access to a contiguous memory region that is 8 bytes large. The operating system allocates an 8 byte region from a virtual address space, and hands this memory region back to the program. A memory region in this context is nothing more than a contiguous interval in the virtual address space. In C/C++, it would be represented by a pointer, or a pair of pointersThe fact that memory is represented by a single pointer in C/C++ is one of the reasons why memory management can be tricky in these languages. A pointer itself is just a number, containing no information about the size of the memory region that it refers to! . If your program now reads from this memory region, the virtual address range has to be translated into a physical address range. This is just a mapping from one number to another number, where the resulting number is the physical address of a memory cell on disk. Now comes the clever part: During this address translation process, the operating system checks that the physical address is cached in working memory. If it is not, it is automatically loaded from disk into working memory. This way, even with virtual addressing, data is always physically accessed in working memory.

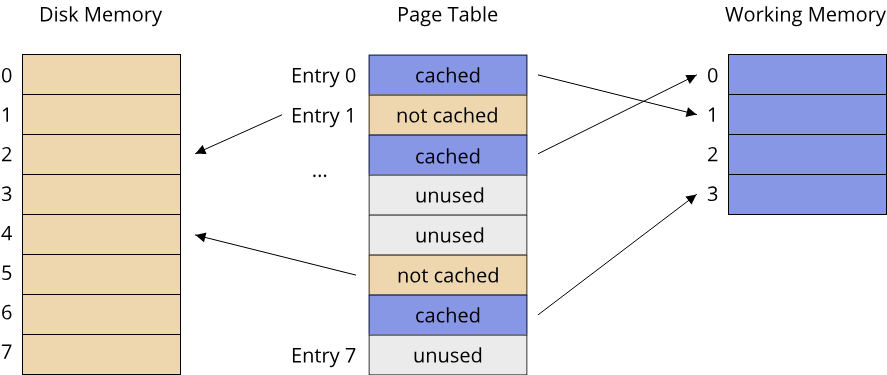

To perform this mapping between addresses, a data structure has to be kept somewhere that stores which virtual address maps to which physical address. Keeping such a mapping for every single byte in the address spaces would result in an unreasonably large data structure. Instead, the mapping is done based on contiguous memory regions called pages (sometimes also called virtual pages). These pages are in the order of a few KiB to a few MiB in size on an average computer today. Using pages makes the caching process easier and reduces the number of address mappings to keep in the internal data structure. A pretty standard page size on Unix-systems is 4KiB, which requires only 1/4096th the number of mapping entries than the naive approach of mapping every byte. Fittingly, the data structure that stores these mappings is called a page table.

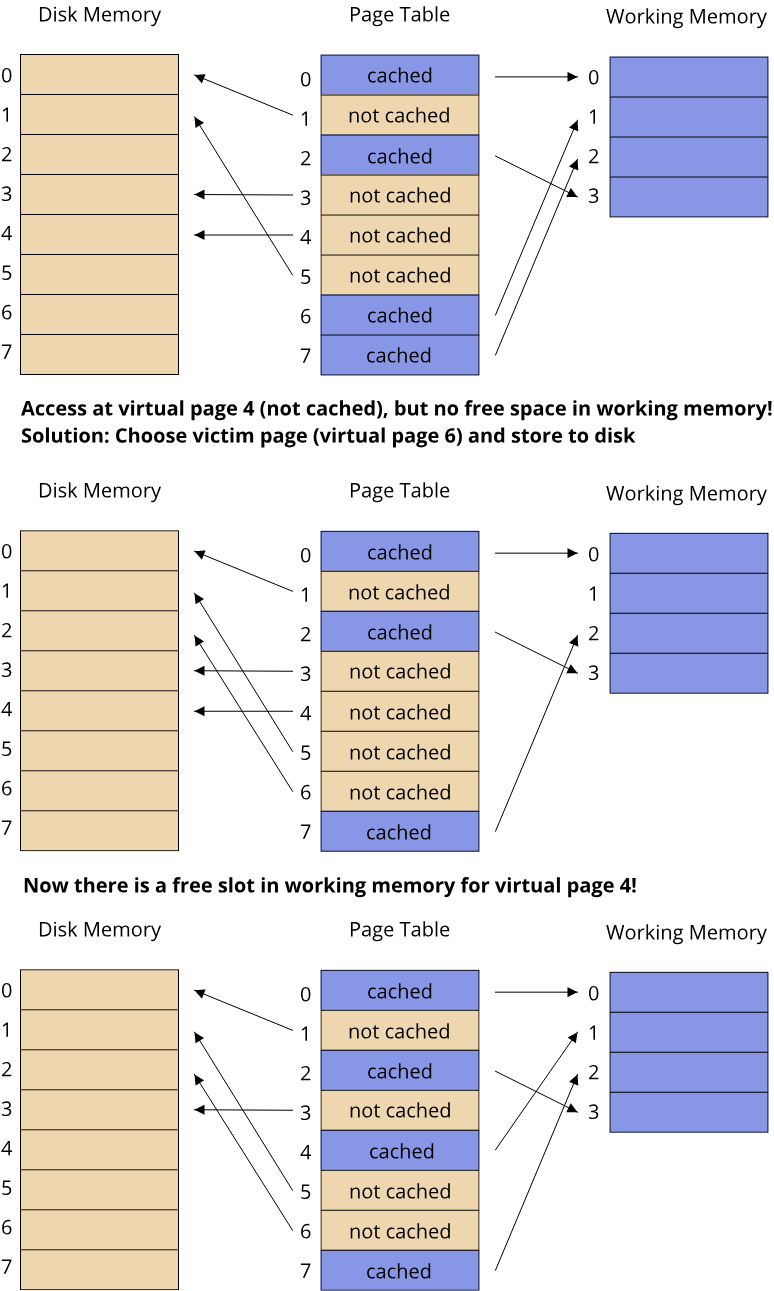

Let's examine the usage of pages more closely. We saw that the operating system manages these pages and their mapping to memory regions in the physical address space. Whenever a data read or write is issued to a page that is not cached in working memory, this page has to be loaded from disk into working memory. This situation is called a page fault. This is a two-step process: First, a suitable address region in the physical address space has to be determined where the page is to be loaded into. Then, the actual copy process from disk into working memory is performed. This copy process takes time, the more so the larger the page size is, which results in a conflicting requirement for an 'ideal' page size. Small page sizes are good for caching, but result in very large page tables, large page sizes give managable page tables but make the caching process slower due to the overhead of copying lots of data from disk to working memory. Now, in order to perform the process of loading a page from disk into working memory, we need a way of determining where into working memory the page should be loaded. Working memory usually provides constant-time random access to any memory cell, so any region in working memory is as good as any other region for our mapping. We thus only have to identify a contiguous memory region as large as the page size that is not currently in use. How to identify such a memory region will be left as an exercise for the lab. For now, assume we found a suitable region. We can then record the mapping between the virtual page and this memory region inside the page table. We call these regions of working memory that contain data for a page the physical page or page frame.

Remember how we said that our virtual address space can be larger than the physical address space? This is quite common in practice. Older hardware architectures, such as the i386 architecture by Intel (the predecessor of the x86-64 architecture that we saw in chapter 2.3), used a virtual address space that was 4GiB large, using the full 32-bit word size of these processors to address virtual memory. For a long time, most systems had significantly less working memory than these 4GiB, but as technology has advanced, systems with this much or more working memory became commonplace. In order to address more memory, modern architectures (such as x86-64) use significantly larger virtual address spaces. On x86-64 systems, where processors have a word size of 64 bits, the virtual address space is typically 48 bits largeWhy 48 bits and not the full 64 bits? Hardware limitations, mostly. 48 bit virtual addresses give you 256TiB of addressable memory, which was and still is considered to be sufficiently large for computers of the forseeable future. Keep in mind that 64-bit architectures were conceived in the late 1990s, so almost 25 years ago as of writing. Even as of today, there is basically no consumer hardware that has even 1TiB of working memory., yielding a virtual address space that is 256TiB in size. It is highly unlikely that the average system will have this much physical memory available, especially not as working memory. This leaves us in an unfortunate situation: Since the virtual address space is singificantly larger than the physical address space, when we encounter a page fault there is a real chance that all of our working memory is already occupied by other pages and no space is left to load in the data for the requested page. In this scenario, we have to select a page that is cached in working memory and have to evict it, which is to say we store its contents on disk and make room for a new page to be cached in working memory.

The process of evicting records from a cache to make room for other records is a fundamental property of any cache. There are many strategies for selecting the appropriate record to evict, all with their own unique advantages and disadvantages. Instead of discussing them here, researching popular caching strategies and implementing a simple virtual memory system based on an appropriate strategy will be a lab assignment.

Virtual memory hands-on

Up until now, we discussed the idea virtual memory in a fairly abstract sense. In this section, we will look at the whole process of virtual memory mapping and page faults using some real numbers to get a good feel for it.

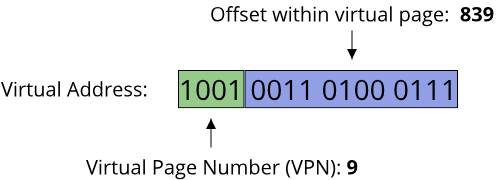

Assume a system with a virtual address space (VAS) of 64KiB size, a physical address space (PAS) of 16KiB size, and a page size of 4KiB. We can represent all virtual addresses using 16-bit numbers. Since each page is 4KiB in size, there are 64KiB / 4KiB = 16 virtual pages in the virtual address space, and 16KiB / 4 KiB = 4 physical pages in the physical address space. If we look at a single virtual memory address, we can thus use the 4 most significant bits for the page number, and the lower 12 bits for the byte offset within a single page:

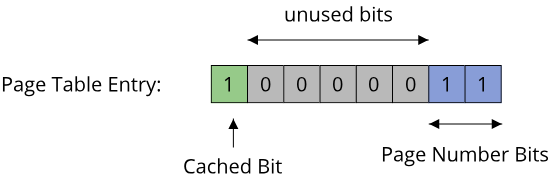

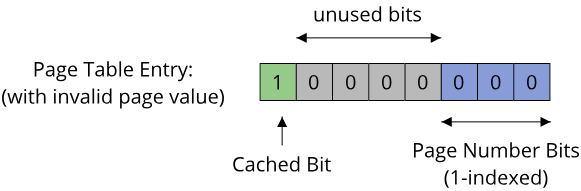

Since the page size is equal for both the virtual and physical address space, the lower 12 bits will tell us both the offset within the virtual page (VPO) as well as the offset within the physical page (PPO). What is different is just the page number. To perform our mapping between virtual page number (VPN) and the physical page number (PPN), we use the page table data structure. This page table is effectively a map between VPO and PPO or and invalid record, indicating that the given virtual page is not currently cached in physical memory. Recall that pages that are not cached in working memory are instead stored on disk, so our invalid record has to identify the location of disk where the given page is stored instead. This can be a binary offset within a file on disk, for example. A common strategy to keep the size of the page table entries (PTE) low is to encode this information in a single number, with a single bit indicating whether the physical page is cached in working memory or resides on disk, and as much bits as are needed to represent the maximum PPO. In our example, there are just 4 physical pages, so we would need only 3 bits in total for a PTE, but we will store them as 8-bit numbers instead:

Initially, the page table starts out with 16 empty records, because none of the virtual pages from the virtual address space have been used yet. We need a special value to indicate that a virtual page is unused, we can use the value 0 for this and switch to 1-based indexing of the physical pages. Using 0 has the advantage that the highest bit is already set to zero, indicating that the page is not cached in working memory. As a downside, we need one more bit for addressing, however we have plenty to spare:

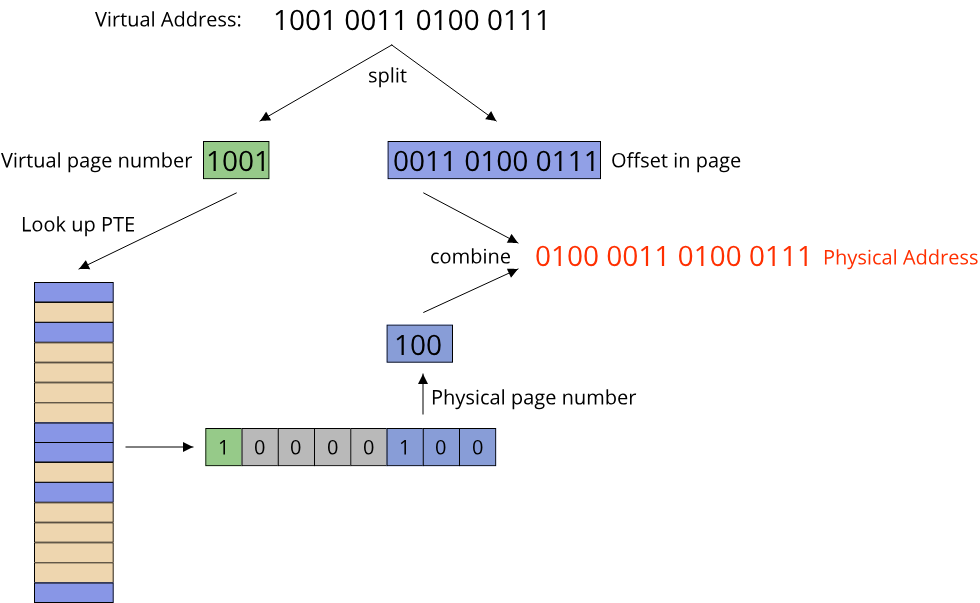

Converting a virtual address to a physical address then works like this:

- Split the virtual address into VPN (the upper 4 bits) and VPO (the lower 12 bits)

- Use the VPN as an index into the page table

- If the PTE indicates that the page is not cached in working memory (highest bit is zero), load it into working memory and adjsut the PTE accordingly

- Get the PPN from the PTE

- Concatenate the PPN and the VPO to get the physical address

Virtual memory in practice

Virtual memory is an old concept that has seen many improvements over the years. As most things in computer science (or life, for that matter), reality tends to be more complex than the examples that we use to learn concepts. In this section, we will briefly look at some of the aspects that make virtual memory work in real operating systems.

While we talked about the process of address translation, we haven't talked about where exactly it happens. Take a look again at a machine code instruction that performs a memory load:

mov eax, BYTE PTR 3 ; move the byte at address 3 into the eax register

If this instruction is executed by the CPU, and the address 3 is a virtual address, then who does the translation into a physical address? The answer is: A specialized piece of hardware right on the CPU, called the memory management unit (MMU). Having the address translation being implemented in hardware is much faster than in software. In order to do the address translation, the MMU needs access to the page table, which has to reside somewhere in memory itself. As we saw, loading data from main memory can take up to a few hundred CPU cycles, which is a cost that we definitely do not want to pay every time we want to translate a virtual address into a physical address. As so often, caches are the answer here: A modern CPU will have a small cache for page table entries that provides very fast access to the most recently used page table entries. This cache is called a translation lookaside buffer (TLB).

The management of the page table itself is done by the operating system. To keep processes separated, each process has its own unique page table, giving each process a unique virtual address space. If two processes both want access to the same physical page, the operating system can map two virtual pages from the two processes onto the same virtual page for an easy way to share data between processes. Additionally, the page table entries will also store more flags than the ones we have seen: A page can be read- or write-protected, allowing only read or write access, and pages can be reserved for processes that are running in kernel mode, preventing user mode processes from manipulating them.

Lastly, a page table must be able to map every virtual page to a physical page, so it must be capable of holding enough entries for every possible virtual page from the virtual address space. With 4KiB pages on an x86-64 system, which uses 48-bit addressing, there are 2^36 unique virtual pages. Assuming 8 byte per page table entry, a full page table would require 512GiB of memory. Clearly this is not practical, so instead a hierarchy of page tables is used in practice. The lowest level of page table refers to individual pages, the next higher level refers to ranges of page table entries, and so on. The Intel Core i7 processor family for example uses 4 levels of page tables.

Summary

In this chapter, we learned about the concept of virtual memory. We learned how virtual addressing works, how to translate virtual addresses to physical addresses, and what the benefits of this approach are. We saw how the operating system manages virtual memory using page tables, learned about page faults and how working memory is used as a cache for the physical address space. Lastly, we saw that additional hardware and more complex page tables are used in practice to make virtual memory viable.