Memory management basics

The way dynamic memory allocations work in C, through manually calling malloc and free, is one of the main points of frustration for programmers coming from higher-level programming languages such as Java or Python, which manage dynamic memory automatically. It is also one of the major points where bugs can manifest in a program. Calling malloc but forgetting to call free, or accidentally calling free twice, are popular errors that even experienced programmers tend to make, especially in large code bases. A large part of systems programming language design over the last decade and more has been focused on preventing these bugs through clever language features, while at the same time retaining the full control over memory allocations to the developers. We will explore which strategies are in use today in both C++ and Rust and build a solid understanding of how these strategies came to be and how they work under the hood. Building this understanding requires three things:

- Understanding composite datatypes (i.e. the

classandstructkeywords) and how they relate to memory - Understanding memory allocation

- Understanding value semantics

Composite datatypes

Let's start with composite datatypes. In C++, these are the types you create when writing class or struct. As a reminder, the only difference between class and struct in C++ is the default visibility of members (public for struct, private for class). A composite type is a type that combines primitive types (int, float, pointers etc.) and other composite types into more complex types. For a precise overview of all the different types in C++, check out the Types page on cppreference.

When we create a variable of such a composite type, the compiler has to figure out how much memory is required for this variable. For this, the language defines a bunch of rules that determine the memory layout of the composite type. While the actual rules can be a bit complicated, it is sufficient to understand the basic principle, for which we will look at the following composite datatype:

struct Composite {

int i1;

double d1;

void* ptr1;

}

The memory layout consists of two pieces of information: The size of the type and its alignment. The size determines how many bytes a single instance of the type requires. Alignment is a bit more special and refers to the numbers of bytes between two successive addresses at which an object of this type can be allocated. The alignment is always a non-negative power of two. Size and alignment are related to each other, as we will see shortly, and this relationship makes things complicated when figuring out how many bytes an instance of a type requires. A simple (but incorrect) formula for the size of a composite type is the sum of the size of each of its members. For our Composite type, this would mean the size of int plus the size of double plus the size of void*. These are all primitive types, so we can look up their size in the C++ standard. The standard only defines lower bounds for the size, but in practice there are a handful of accepted values for all major platforms. Disregarding the old Win16 API, the three major conventions are called ILP32 (4/4/4), LLP64 (4/8/8), and LP64 (4/8/8). The numbers refer to the size of an int, long, and pointer respectively. ILP32 is the default target for all 32-bit Unix systems (Linux, macOS) as well as the Win32 API. LLP64 is the Win32 API on 64-bit systems, and LP64 is 64-bit Unix. Let's stick with LP64 for now, which gives us the following

- Size of

int: 4 bytes - Size of

double: 8 bytes (typically refers to the 64-bit type in IEEE-754 floating point standard) - Size of

void*: 8 bytes (since it is a pointer)

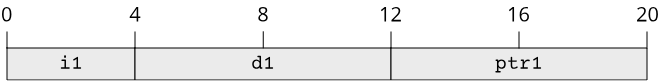

Our naive algorithm would thus give Composite a size of 20 bytes. The memory layout of this type would therefore consist of 4 bytes for the i1 member, followed by 8 bytes for the d1 member and another 8 bytes for the ptr1 member:

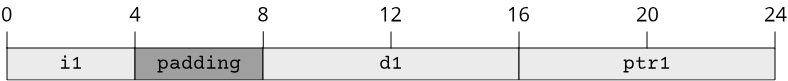

In comes the alignment. Alignment is important because certain CPU instructions require that the data that they operate on is aligned to a specific power of two, which is to say the memory address on which the instruction operates is divisible by that power of two. The x86-64 instruction set is a bit more lenient and allows non-aligned accesses (with a performance penalty), others, such as the ARM instruction set, require correct alignment and will otherwise raise a CPU error! 64-bit floating point operations typically require 64-bit (8-byte) alignment, so our double value has to be 64-bit aligned! Suppose our variable of type Composite starts at byte 0 (which is correctly aligned, as 0 is divisible by 8). The offset of the d1 member within Composite is 4 bytes, so d1 would have the address 4, which is not divisible by 8. This would violate alignment!

To guarantee correct alignment for all members of a composite type, the compiler is allowed to introduce padding bytes, which are unused bytes within the type that do not belong to any member. In the case of the Composite type, the compiler might insert four padding bytes after the i1 member, but before the d1 member. This will guarantee that both d1 and ptr1 will always be correctly aligned, so long as the instance of the type starts at a correctly aligned address. The corrected memory layout thus will look like this:

There are some ways to control the amount of padding in C++, typically through compiler intrinsics. For example to prevent any padding bytes from being inserted, on GCC one would use __attribute__ ((packed)) or the equivalent #pragma pack(1) on MSVC.

Rust has its own set of rules for type memory layout, which are explained in the documentation. By default, the Rust compiler will respect the alignment of all type members, though the default memory layout (called the Rust representation) is implementation-defined. There are options to use a memory layout compatible with C, or to specify alignment directly.

The difference between memory allocation and memory initialization

Before looking at ways to make our life easier as programmers, we quickly have to go over the difference between malloc/free in C and new/delete in C++. Simply put: malloc allocates memory, but it does not initialize it, which is why in C you will often see code that first calls malloc to obtain a pointer, and then writes some values to the memory pointed to by this pointer. In C++, classes exist, from which objects can be created. If you create an object, you would like it to be in a usable state immediately after creation, which is what the constructor is for in C++. If you create a new object on the stack, you call the constructor and the compiler figures out where in memory to put your object:

struct Obj {

int v1;

float v2;

};

int main() {

Obj obj = Obj(); //This is the constructor call right here: Obj()

return 0;

}

The variable declaration (Obj obj) is what allocates memory on the stack, the constructor call (Obj()) is what writes values to that memory.

new is nothing more than the way to express within C++ that you want that exact same object, but allocated in a dynamic memory region on the heap instead of on the stack:

struct Obj {

int v1;

float v2;

};

int main() {

auto obj = new Obj(); //Almost the same syntax, the constructor call is still there!

return 0;

}

It is worth pointing out that in this second example, there are actually 2 memory allocations: One on the heap through new, and one on the stack, because new returns a pointer to the allocated memory block on the heap, and we store this pointer in a variable on the stack! We could do new Obj(); instead of auto obj = new Obj(); and would only get the heap allocation, but then we would never be able to free the memory allocated with new because we never memorized the address of the allocation.

Objects that manage memory

Besides constructors, C++ also has destructors, which manage how to destroy an object. Here is the neat thing: The C++ language ensures that the destructor of an object is automatically called when the object goes out of scope. So just as stack memory is automatically cleaned up when we exit a function scope, destructors are called for all objects that live within that function scope:

#include <iostream>

struct Obj {

int v1;

float v2;

Obj() {

std::cout << "Constructor called\n";

}

~Obj() {

std::cout << "Destructor called\n";

}

};

int main() {

auto obj = Obj(); //<-- Object lifetime starts here with constructor call

return 0;

} //<-- Object lifetime ends here with *automatic* destructor call

This fundamental property of the C++ language has a name: Resource acquisition is initialization, or RAII for short. The name is unintuitive, but the concept behind it is immensely powerful and one of the key features that set C++ apart from other programming languages. Rust also supports RAII and makes heavy use of it.

With RAII, we can exploit the fact that we get an automated clean-up mechanism to make dynamic memory management easier. Think about our growable array that we wanted to write. If we use dynamic memory allocation to make it work, we better make sure that whoever is using this growable array does not have to worry about the internal memory management that is going on. We can do this by writing our growable object as a class which frees its allocated memory in its destructor:

This vector implementation is far from perfect, but what it does is it encapsulates the dynamic memory allocation and makes sure that whoever uses this vector never has to use one of the dynamic memory allocation routines themselves. All allocations happen automatically, and once the vec variable goes out of scope at the end of main, all allocated memory is released again. And even better: The dynamic memory management is done in a way that is just as efficient as if we had written everything by hand! As systems programmers, we love these kinds of abstractions: Fast code, but much nicer to read and write, and very safe as well!

A key takeaway from this section is that the RAII mechanism is so powerful because it is automatic. We never really have memory management problems when only using stack-based memory management (i.e. local variables), so we try to wrap dynamic memory allocations in types that can be used using stack-based memory management. This is what the rest of this chapter is all about!

Recap

Try answering the following questions for yourself:

- What exactly is alignment in the context of memory? Why is it necessary?

- What is the difference between

mallocandnewin C++? Why do we need both?