Preface

This book serves as the script for the lecture 'Advanced Systems Programming' taught at the University of Applied Sciences in Darmstadt. 'Advanced Systems Programming' is part of the curriculum of the computer science bachelor degree course. It is aimed at undergrad students in their last couple of semesters and is an optional course. Prerequisites are the basic 'Introduction to Programming' courses (PAD1&2) as well as the introductory course on operating systems (Betriebssysteme).

What is Advanced Systems Programming about?

The main focus of the Advanced Systems Programming course and this book is to teach you how to develop systems software using a modern programming language (Rust). Within this course, you will learn the basics of what systems programming is with many hands-on examples and exercises to get them familiar with the modern systems programming language Rust. You are expected to have basic knowledge of C++, so one of the cornerstones of this course is the continuous comparison of common aspects of systems software in the C++-World and the Rust-World. These aspects include:

- Data (memory and files, ownership)

- Runtime performance (zero-overhead abstractions, what makes C++ & Rust fast or slow?)

- Error Handling

- Communication between processes and applications

- Concurrency as a basic building block of systems software

- The development ecosystem, specifically Rust's

cargoand how it unifies compilation, deployment, configuration, testing, benchmarking etc.

For students taking the ASP course, hands-on experience with these topics is gained in the extended lab part, where they will implement the learned aspects within real systems software. In the first part of the lab, this is done through a series of exercises, which include translating concepts from C++ code to the Rust world and extending small programs with new features. The second part of the lab will be a larger project in which students will implement a system of their choice (for example a web-server, game-engine, or a compiler for a scripting language) using the concepts that they have learned in this course. All lab sessions will also include a small analysis of an existing piece of (open-source) software. This way, students will familiarize themselves with reading foreign code and will get experience from real-world code.

What is Advanced Systems Programming not about?

Systems programming is a very large discipline that covers areas from all over the computer science field. As such, many of the concepts that you will learn and use in this course might warrant a course on their own (and sometimes already have a course on their own). As such, Advanced Systems Programming is not a course that goes into the cutting edge research of how to write blazing fast code on a supercomputer or writing an operating system from scratch. While this course does cover many details that are relevant to systems programming, it is much more focused on the big picture than a deep-dive into high-performance software.

Additionally, while this course uses Rust as its main programming language, it will not cover all features that Rust has to offer, nor will it teach all Rust concepts from the ground up in isolation. Rust is well known for its outstanding documentation and you are expected to use this documentation, in particular the Rust book, to extend your knowledge of Rust beyond what is covered in this course. This course also is not a tutorial on how to use Rust. You will learn systems programming using Rust, but Rust as a general-purpose language has many more areas of application than just systems programming. A lot of focus on the practical aspects of using the Rust language will be put in the lab part of this course, as it is the authors firm believe that one learns programming by doing, and not so much by reading.

How to use this book?

This book is meant to be used as the backing material for the lecture series. In principle, all information covered in the lectures can be found within this book (disregarding spontaneous disussions and questions that might arise during a lecture). Depending on your learning style, this book can serve as a reference for the things discussed in the lecture, or can be used for self-study. It does not replace participation in the lab part of the course. Gaining hands-on experience is at the core of this lecture series, so attendence of the lab is mandatory.

Besides a lot of text, throughout this book you will find several exercises, which range from conceptual questions to small programming tasks. These exercises are meant to recap the learned material and sometimes to do some further research on your own. With an exception to the open-ended questions, all exercises do provide solutions hidden behind a collapsible UI element, like so:

Additionally, this book contains many code examples, which look like this:

fn main() { println!("Hello Rust"); }

Whenever applicable, you can run the code example using the play button on the top-right of the code panel. Rust code is run using the Rust playground.

Supplementary material

This book comes with lots of code examples throughout the text. In addition, the following text-books are a good start for diving into the concepts taught in this course:

- Bryant, Randal E., O'Hallaron David Richard, and O'Hallaron David Richard. Computer systems: a programmer's perspective. Vol. 2. Upper Saddle River: Prentice Hall, 2003. {{#cite Bryant03}}

- Klabnik, Steve, and Carol Nichols. The Rust Programming Language (Covers Rust 2018). No Starch Press, 2019. {{#cite Klabnik19}}

- Also available online: https://doc.rust-lang.org/book/

- Stroustrup, Bjarne. "The C++ programming language." (2013). {{#cite Stroustrup00}}

An comprehensive list of references can be found at the end of this book.

1. Introduction to Systems Programming

In this chapter, we will understand what Systems Programming is all about. We will get an understanding of the main concepts that make a piece of software 'systems software' and which programming language features help in developing systems software. We will classify programming languages by their ability to be used in systems programming and will take a first look at a modern systems programming language called Rust.

1.1 What is systems programming?

The term systems programming is a somewhat losely defined term for which several definitions can be found. Here are some definitions from the literature:

- "writing code that directly uses hardware resources, has serious resource constraints, or closely interacts with code that does" ("The C++ programming language", Stroustrup)

- "“systems-level” work [...] deals with low-level details of memory management, data representation, and concurrency" ("The Rust Programming Language", Steve Klabnik and Carol Nichols)

- "a systems programming language is used to construct software systems that control underlying computer hardware and to provide software platforms that are used by higher level application programming languages used to build applications and services" (from a panel on systems programming at the Lang.NEXT 2014 conference)

- "Applications software comprises programs designed for an end user, such as word processors, database systems, and spreadsheet programs. Systems software includes compilers, loaders, linkers, and debuggers." (Vangie Beal, from a 1996 blog post)

From these definitions, we can see that a couple of aspects seem to be important in systems programming:

- Systems programming interacts closely with the hardware

- Systems programming is concerned with writing efficient software

- Systems software is software that is used by other software, as opposed to applications software, which is used by the end-user

These definitions are still a bit ambiguous, especially the third one. Take a database as an example: A user might interact with a database to store and retrieve their data manually. At the same time, a database might be accessed from other software, such as the backend of a web-application. Most databases are developed with efficiency and performance in mind, so that they can perform well even under high load and with billions of entries. Is a database an application or systems software then?

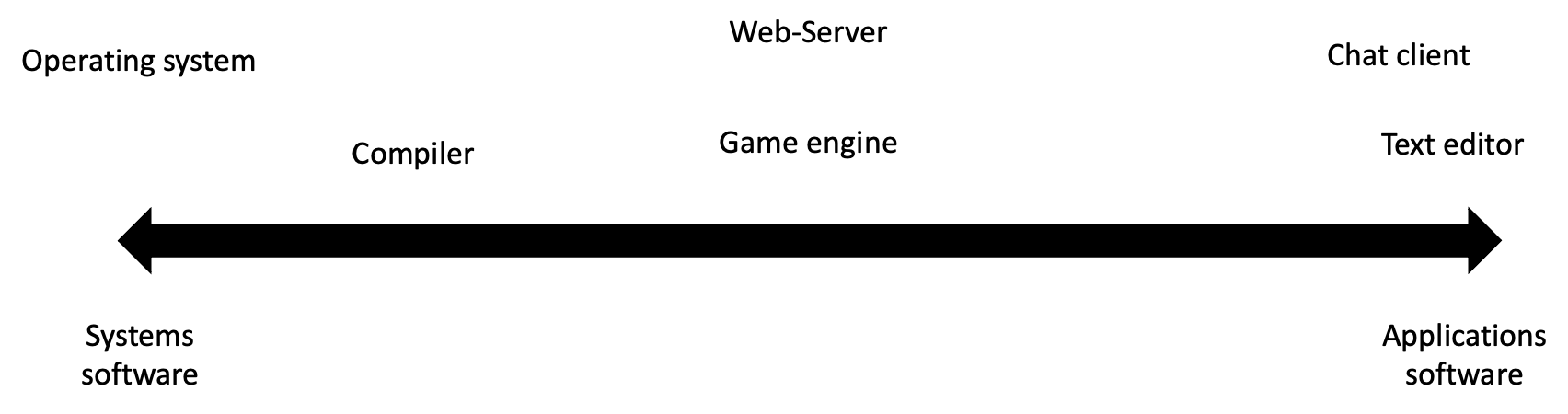

For this course, instead of seeing systems programming and application programming as two disjunct domains, we will instead think of them as two ends of a spectrum. We will see that most software falls somewhere on this spectrum, with aspects from systems programming and aspects from application programming. For the sake of completeness, here are some types of software that will be considered systems software in the context of this course:

- Operating systems (perhaps the prime example of systems software. It even has system in the name!)

- Web-Servers

- Compilers and Debuggers

- Game Engines

🔤 Exercise 1.1.1

Take a look at the software running on your computer. Pick 5 programs and put them onto the systems programming/application programming scale. Justify your decisions using the criteria for systems programming that you learned in this section.

💡 Need help? Click here

| Software | Systems Programming (0) vs. Applications Programming (5) | Reasons |

|---|---|---|

| Browser | 2 | High performance and security demands, needs to run JavaScript code (and potentially WASM, WebGL etc.) |

| Terminal | 3 | Seems low-level, but most of the heavy lifting is done by the OS, making the terminal more of a GUI for typing commands and displaying text |

| Mail Client | 5 | Not particularily resource-constrained, needs to make some network calls and maybe uses a database internally, but mostly this is a user-facing GUI application |

systemd (on Linux) | 0 | Its main purpose is to control other software, and it interacts closely with the OS |

| IDE (e.g. VS Code) | 4 | A fancy text editor that acts as a GUI for all the actual development components (compiler, linker etc.) |

1.2 How do we write systems software?

To write software, we use programming languages. From the multitude of programming languages in use today, you will find that not every programming language is equally well suited for writing the same types of software. A language such as JavaScript might be more suited for writing client-facing software and can thus be considered an application programming language. In contrast, a language like C, which provides access to the underlying hardware, will be more suited for writing systems software and thus can be considered a systems programming language. In practice, most modern languages can be used for a multitude of tasks, which is why you will often find the term general-purpose programming language being used.

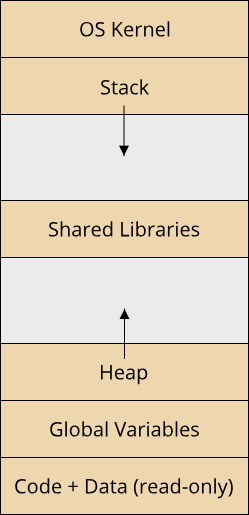

An important aspect that makes some languages ill-suited for writing systems software under our definition is the ability to access the computers hardware resources directly. Examples of hardware resources are:

- Memory (working memory and disk memory)

- CPU cycles (both on a single logical core and on multiple logical cores)

- Network throughput

- GPU (Graphics Processing Unit) cycles

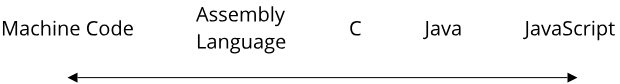

Based on these hardware resources, we can classify programming languages by their ability to directly manage access to these resources. This leads us to the often-used terms of low-level and high-level programming languages. Again, there is no clear definition of what constitutes a low-level or high-level programming language, and indeed the usage of these terms has changed over the last decades. Here are two ways of defining these terms:

- Definition 1) The level of a programming language describes the level of abstraction over a machines underlying hardware architecture

- Definition 2) A low-level programming language gives the programmer direct access to hardware resources, a high-level programming language hides these details from the programmer

Both definitions are strongly related and deal with hardware and abstractions. In the context of computer science, abstraction refers to the process of hiding information in order to simplify interaction with a system (Colburn, T., Shute, G. Abstraction in Computer Science. Minds & Machines 17, 169–184 (2007). https://doi.org/10.1007/s11023-007-9061-7). Modern computers are extremely sophisticated, complex machines. Working with the actual hardware in full detail would include a massive amount of information that the programmer needs to know about the underlying system, making even simple tasks very time-consuming. All modern languages, even the ones that can be considered fairly low-level, thus use some form of abstraction over the underlying hardware. As abstraction is information hiding, there is the possibility of a loss of control when using abstractions. This can happen if the abstraction hides information necessary to achieve a specific task. Let's look at an example:

The Java programming language can be considered fairly high-level. It provides a unified abstraction of the systems hardware architecture called the Java Virtual Machine (JVM). One part of the JVM is concerned with providing the programmer access to working memory. It uses a high degree of abstraction: Memory can be allocated in a general manner by the programmer, unused memory is automatically detected and cleaned up through a garbage collector. This makes the process of allocating and using working memory quite simple in Java, but takes the possibility of specifying exactly where, when and how memory is allocated and released away from the user. The C programming language does not employ a garbage collector and instead requires the programmer to manually release all allocated memory once it is no longer used. Under this set of features and our two definitions of a programming language's level, we can consider Java a more high-level programming language than C.

Here is one more example to illustrate that this concept applies to other hardware resources as well:

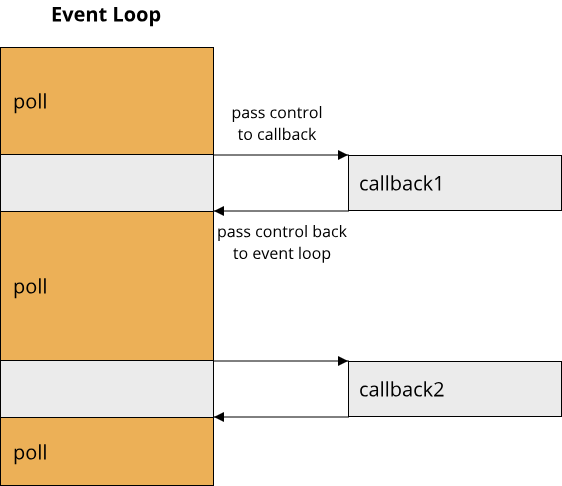

The JavaScript programming language is an event-driven programming language. One of its main points of application is the development of client-side behaviour of web pages. Here, the JavaScript code is executed by another program (your browser), which controls the execution flow of the JavaScript program through something called an event-loop. Without going into detail on how this event-loop works, it enforces a sequential execution model for JavaScript code. This means that in pure JavaScript, no two pieces of code can be executed at the same time. Now take a look at the Java programming language. It provides a simple abstraction for running multiple pieces of code in parallel called a Thread. Through threads, the programmer effectively gains access to multiple CPU cores as a resource. In practice, many of the details are still managed by the JVM and the operating system, but given our initial definitions, we can consider JavaScript a more high-level programming language than Java.

Similar to our classification of software on a scale from systems to applications, we can put programming languages on a scale from low-level to high-level:

🔤 Exercise 1.2.1

Given the programming languages Python, C++, Haskell, Kotlin and Assembly language, sort them onto the scale from low-level to high-level programming languages. What can you say about the relationship between Kotlin and Java? How about the relationship between C and C++? Where would you put Haskell on this scale, and why?

💡 Need help? Click here

| Language | Low-level (0) vs. High-level (5) | Reasons |

|---|---|---|

| Python | 5 | Interpreted, dynamically typed, garbage collected (i.e. no manual memory management) |

| C++ | 2 (above C) | Compiled, statically typed, manual memory management. Syntactically more dense than C, but provides more abstractions, so a bit more high-level than C |

| Haskell | 5 (above Python) | Purely functional language with zero imperative programming constructs, making it very far removed from the actual hardware |

| Kotlin | 4 (above Java) | Runs on the JVM, so similar power to Java (i.e. limited manual memory management constructs), but incorporates more functional programming abstractions, making it more high-level than Java |

| Assembly Language | 1 | Almost as low-level as it gets, though there are undocumented CPU instructions not expressible in assembly language |

Now that we have learned about low-level and high-level programming languages, it becomes clear that more low-level programming languages will provide us with better means for writing good systems software that makes efficient use of hardware resources. At the same time, the most low-level programming languages will be missing some abstractions that we would like to have in order to make the process of writing systems software efficient. For the longest time, C and C++ thus were the two main programming languages used for writing systems software, which also shows in their popularity. These are powerful, very well established languages which, despite their considerable age (C being developed in the early 1970s, C++ in the mid 1980s), still continue to be relevant today. At the same time, over the last decade new systems programming languages have emerged, such as Rust and Go. These languages aim to improve some shortcomings of their predecessors, such as memory safety or simple concurrent programming, while at the same time maintaining a level of control over the hardware that makes them well-suited for systems programming.

This course takes the deliberate decision to focus on Rust as a modern systems programming language in contrast to a well-established language such as C++. While no one of the two languages is clearly superior to the other, Rust does adress some shortcomings in C++ in terms of memory safety and safe concurrent programming that can make writing good systems software easier. Rust also has gained a lot of popularity over the last couple of years, continuingly scoring as the most loved programming language in the StackOverflow programmers survey. In addition, Rust's excellent tooling makes it very well suited for a lecture series, as getting some Rust code up and running is very simple.

At the same time, this course assumes that the students are familiar with C++, as it will make continuous references to C++ features important in systems programming and compare them to Rust's approach on systems programming.

1.3 Systems programming features in a nutshell

In this course, we will learn the most important concepts when writing systems software and apply them to a series of real-world problems. The features covered in this book are:

- A general introduction to Rust and its concepts compared to C++ (Chapter 2)

- The fundamentals of memory management and memory safety (Chapter 3)

- Zero-overhead abstractions - Writing fast, readable and maintainable code (Chapter 4)

- Error handling - How to make systems software robust (Chapter 5)

- Systems level I/O - How to make systems talk to each other (Chapter 6)

- Fearless concurrency - Using compute resources effectively (Chapter 7)

- Performance - How to measure, evaluate and tweak systems performance (Chapter 8)

2. A general introduction to Rust and its concepts compared to C++

The Rust programming language is an ahead-of-time compiled, multi-paradigm programming language that is statically typed, uses borrow checking for enforcing memory safety and supports ad-hoc polymorphism through traits, among other features.

This definition is quite the mouthful of fancy words. In this chapter, we will try to understand the basics of what these features mean, why they are useful in systems programming, and how they relate to similar features present in C++. We will dive deeper into some of Rusts features in the later chapters, however we will always do so by keeping the systems programming aspect in mind. The Rust book does a fantastic job at teaching the Rust programming language in general, so refer to it whenever you want to know more about a specific Rust feature or are confused about a piece of syntax or a specific term.

Let's start unpacking the complicated statement above! There are several highlighted concepts in this sentence:

- ahead-of-time compiled

- multi-paradigm

- statically-typed

- borrow checking

- ad-hoc polymorphism

- traits

Over the next sections, we will look at each of these keywords together with code examples to understand what they mean and how they relate to systems programming.

2.1. Rust as an ahead-of-time compiled language

In this chapter, we will learn about compiled languages and why they are often used for systems programming. We will see that both Rust and C++ are what is known as ahead-of-time compiled languages. We will learn the benefits of ahead-of-time compilation, in particular in terms of program optimization, and also its drawbacks, specifically problems with cross-plattform development and the impact of long compilation times.

Programming languages were invented as a tool for developers to simplify the process of giving instructions to a computer. As such, a programming language can be seen as an abstraction over the actual machine that the code will be executed on. As we have seen in chapter 1.2, programming languages can be classified by their level of abstraction over the underlying hardware. Since ultimately, every program has to be executed on the actual hardware, and the hardware has a fixed set of instructions that it can execute, code written in a programming language has to be translated into hardware instructions at some point prior to execution. This process is known as compilation and the associated tools that perform this translation are called compilers. Any language whose code is executed directly on the underlying hardware is called a compiled languageThe exception to this are assembly languages, which are used to write raw machine instructions. Code in an assembly language is not compiled but rather assembled into an object file which your computer can execute..

At this point, one might wonder if it is possible to execute code on anything else but the underlying hardware of a computer system. To answer this question, it is worth doing a small detour into the domain of theoretical computer science, to gain a better understanding of what code actually is.

Detour - The birth of computer science and the nature of code

In the early 20th century, mathematicians were trying to understand the nature of computation. It had become quite clear that computation was some systematic process with a set of rules whose application enabled humans to solve certain kinds of problems. Basic arithmetic operations, such as addition, subtraction or multiplication, were known to be solvable through applying a set of operations by ancient Babylonian mathematicians. Over time, specific sets of operations for solving several popular problems became known, however it remained unclear whether any possible problem that can be stated mathematically could also be solved through applying a series of well-defined operations. In modern terms, this systematic application of a set of rules is known as an algorithm. The question that vexed early 20th century mathematicians could thus be simplified as:

"For every possible mathematical problem, does there exist an algorithm that solves this problem?"

Finding an answer to this question seems very rewarding. Just imagine for a moment that you were able to show that there exists a systematic set of rules that, when applied, gives you the answer to every possible (mathematical) problem. That does sound very intruiging, does it not?

The main challenge in answering this question was the formalization of what it means to 'solve a mathematical problem', which can be stated as the simple question:

"What is computation?"

In the 1930s, several independent results were published which tried to give an answer to this question. Perhaps the most famous one was given by Alan Turing in 1936 with what is now know as a Turing machine. Turing was able to formalize the process of computation by breaking it down to a series of very simple instructions operating on an abstract machine. A Turing machine can be pictured as an infinitely long tape with a writing head that can manipulate symbols on the tape. The symbols are stored in cells on the tape and the writing head always reads the symbol at the current cell. Based on the current symbol, the writing head writes a specific symbol to the current cell, then moves one step to the left or right. Turing was able to show that this outstandingly simple machine is able to perform any calculation that could be performed by a human on paper or any other arbitrarily complex machine. This statement is now known as the Church-Turing thesis.

So how is this relevant to todays computers, programming languages and systems programming? Turing introduced two important concepts: A set of instructions ("code") to be executed on an abstract machine ("computer"). This definition holds until today, where we refer to code as simply any set of instructions that can be executed on some abstract machine.

It pays off to think about what might constitute such an 'abstract computing machine'. Processors of course come to mind, as they are what we use to run our instructions on. However, humans can also be considered as 'abstract computing machines' in a sense. Whenever we are doing calculations by hand, we apply the same principal of systematic execution of instructions. Indeed, the term computer historically refered to people carrying out computations by hand.

Interpreted languages

Armed with our new knowledge about the history of computers and code, we can now answer our initial question: Is it possible to execute code on anything else but the underlying hardware of a computer system? We already gave the answer in the previous section where we saw that there are many different types of abstract machines that can carry out instructions. From a programmers point of view, there is one category of abstract machine that is worth closer investigation, which is that of emulation.

Consider a C++ program that simulates the rules of a Turing machine. It takes a string of input characters, maybe through the command line, and applies the rules of a Turing machine to this input. This program itself can now be seen as a new abstract machine executing instructions. Instead of executing the rules of a Turing machine on a real physical Turing machine, the rules are executed on the processors of your computer. Your computer thus emulates a Turing machine. Through emulation, computer programs can themselves become abstract machines that can execute instructions. There is a whole category of programming languages that utilize this principle, called interpreted programming languagesWhy interpreted and not emulated? Read on!.

In an interpreted programming language, code is fed to an interpreter, which is a program that parses the code and executes the relevant instructions on the fly. This has some advantages over compiled languages, in particular independence of the underlying hardware and the ability to immediately execute code without waiting on a possibly time-consuming compilation process. At the same time, interpreted languages are often significantly slower than compiled languages because of the overhead of the interpreter and less potential for optimizing the written code ahead of time.

As we have seen, many concepts in computer science are not as binary (pun intended) as they first appear. The concept of interpreted vs. compiled languages is no different: There are interpreted languages which still use a form of compilation to first convert the code into an optimized format for the interpreter, as well as languages which defer the compilation process to the runtime in a process called just-in-time compilation (JIT).

Why ahead-of-time compiled languages are great for systems programming

As one of the main goals of systems programming is to write software that makes efficient use of computer resources, most systems programming is typically done with ahead-of-time compiled languages. There are two reasons for this:

- First, ahead-of-time compiled languages get translated into machine code for specific hardware architectures. This removes any overhead of potential interpretation of code, as the code runs 'close to the metal', i.e. directly on the underlying hardware.

- Second, ahead-of-time compilation enables automatic program optimization through a compiler. Modern compilers are very sophisticated programs that know the innards of various hardware architectures and thus can perform a wide range of optimizations that can make the code substantially faster than what was written by the programmer.

- A third benefit that is related to ahead-of-time compilation is that we can perform correctness checks on the program, more on that in chapter 2.4

This second part - program optimization through the compiler - plays a big role in systems programming. Of course we want our code to be as efficient as possible, however depending on the way we write our code, we might prevent the compiler from applying optimizations that it otherwise could apply. Understanding what typical optimizations are will help us understand how we can write code that is more favorable for the compiler to optimize.

We will now look at how ahead-of-time compilation works in practice, using Rust as an example.

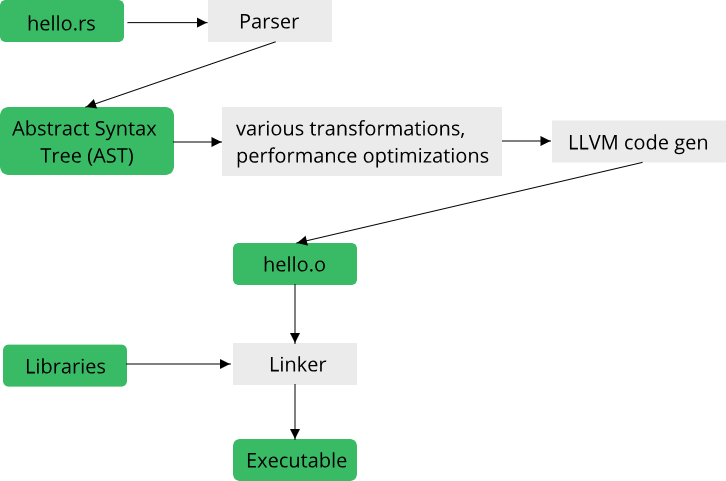

The Rust programming language is an ahead-of-time compiled language built on top of the LLVM compiler project.

The Rust toolchain includes a compiler, rustc, which converts Rust code into machine code, similar to how compilers such as gcc, clang or MSVC convert C and C++ code into machine code. While the study of the Rust compiler (and compilers in general) is beyond the scope of this course, it pays off to get a basic understanding of the process of compiling Rust code into an executable programWe will use the term executable code to refer to any code that can be run on an abstract machine without further preprocessing. The other usage of the term executable is for an executable file and to distinguish it from a library, which is a file that contains executable code, but can't be executed on its own..

When you install Rust, you will get access to cargo, the build system and package manager for Rust. Most of our interaction with the Rust toolchain will be through cargo, which acts as an overseer for many of the other tools in the Rust ecosystem. Most importantly for now, cargo controls the Rust compiler, rustc. You can of course invoke rustc yourself, generally though we will resort to calls such as cargo build for invoking the compiler. Suppose you have written some rust code in a file called hello.rs (.rs is the extension for files containing Rust code) and you run rustc on this file to convert it into an executable. The following image gives an overview of the process of compiling your source file hello.rs into an executable:

Your code in its textual representation is first parsed and converted into an abstract syntax tree (AST). This is essentially a data structure that the represents your program in a way that the compiler can easily work with. From there, a bunch of transformations and optimizations are applied to the code, which is then ultimately fed into the LLVM code generator, which is responsible for converting your code into something that your CPU understands. The output of this stage are called object files, as indicated by the .o file extension (or .obj on Windows). Your code might reference other code written by other people, in the form of libraries. Combining your code with the libraries into an executable is the job of the linker.

The Rust compiler (and most other compilers that you will find in the wild) has three main tasks:

-

- It enforces the rules of the programming language. This checks that your code adheres to the syntax and semantics of the programing language

-

- It converts the programming language constructs and syntax into a series of instructions that your CPU can execute (called machine code)

-

- It performs optimizations on the source code to make your program run faster

The only mandatory stage for any compiler is stage 2, the conversion of source code into executable instructions. Stage 1 tends to follow as a direct consequence of the task of stage 2: In order to know how to convert source code into executable instructions, every programming language has to define some syntax (things like if, for, while etc.) which dictates the rules of this conversion. In that regard, programming language syntax is nothing more than a list of rules that define which piece of source code maps to which type of runtime behaviour (loops, conditionals, call statements etc.). In applying these rules, compilers implicitly enforce the rules of the programming language, thus implementing stage 1.

The last stage - program optimization - is not necessary, however it has become clear that this is a stage which, if implemented, makes the compiler immensely more powerful. Recall what we have learned about abstractions in chapter 1: Abstraction is information-hiding. A programming language is an abstraction for computation, an abstraction which might be missing some crucial information that would improve performance. Because compilers can optimize our written code, we can thus gain back some information (albeit only indirectly) that was lost in the abstraction. In practice this means we can write code at a fairly high level of abstraction and can count on the compiler to fill in the missing information to make our code run efficiently on the target hardware. While this process is far from perfect, we will see that it can lead to substantial performance improvements in many situations.

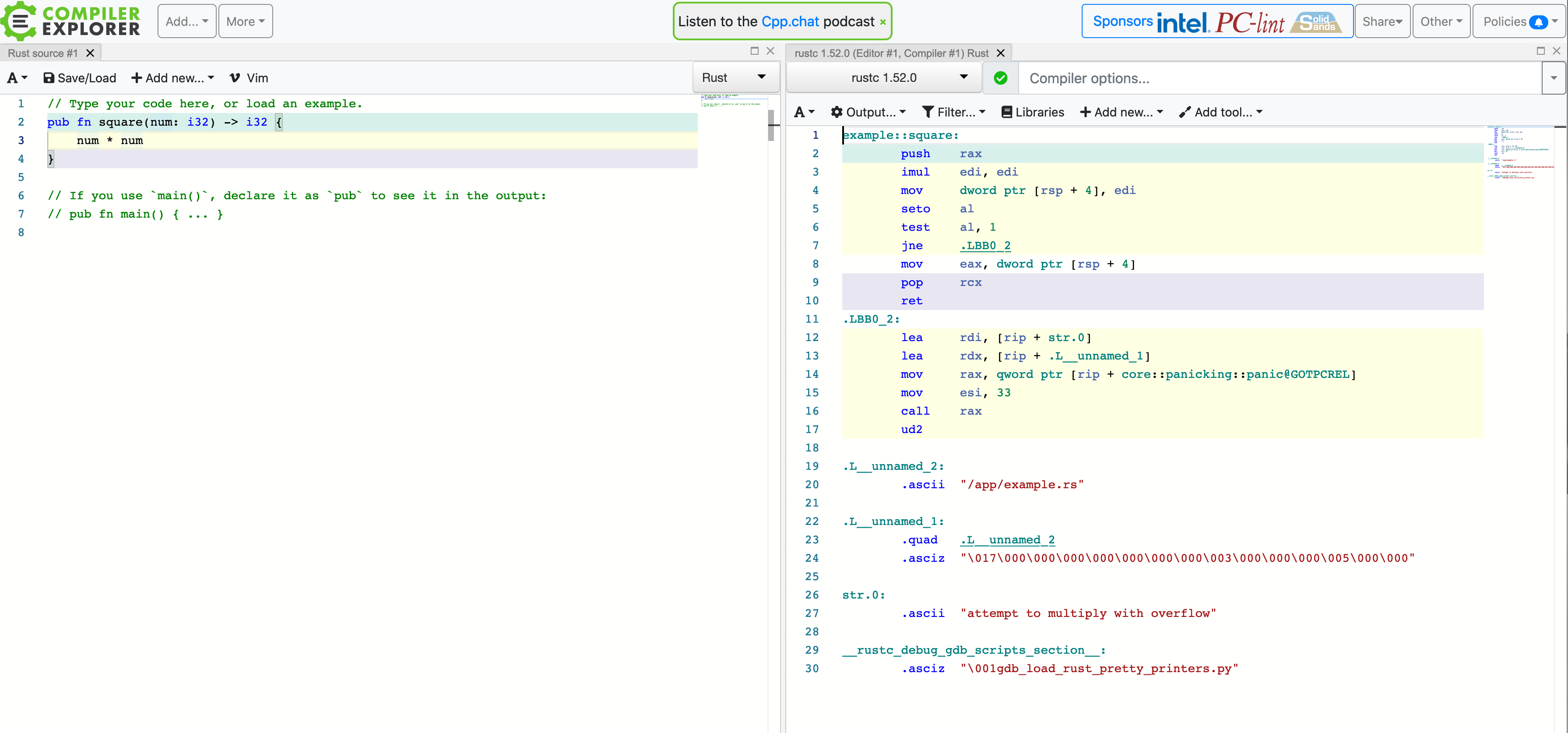

Compiler Explorer: A handy tool to understand compiler optimizations

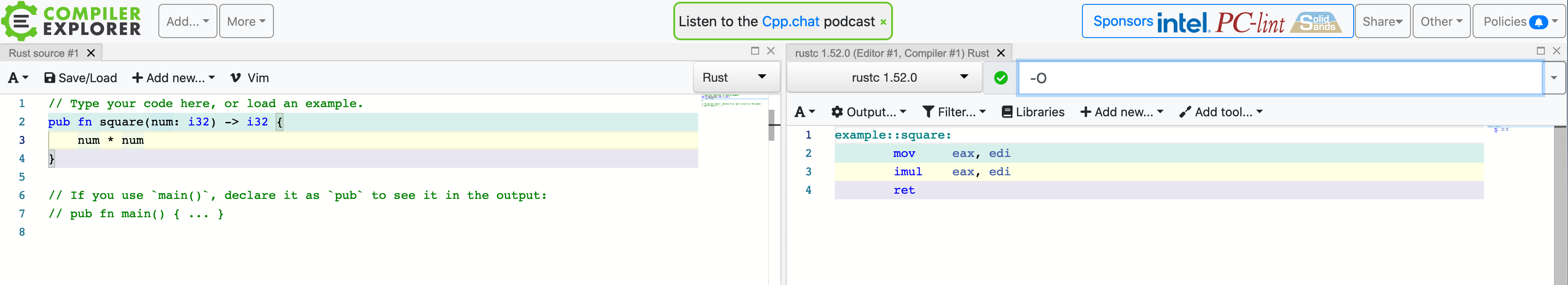

When studying systems programming, it can sometimes be helpful to understand just what exactly the compiler did to your source code. While it is possible to get your compiler to output annotated assembly code (which shows the machine-level instructions that your code was converted into), doing this by hand can become quite tedious, especially in a bigger project where you might only be interested in just a single function. Luckily, there exists a tremendously helpful tool called Compiler Explorer which we will use throughout this course. Compiler Explorer is a web-application that lets you input code in one of several programming languages (including C++ and Rust) and immediately shows you the corresponding compiler output for that code. It effectively automates the process of calling the compiler with the right arguments by hand and instead gives us a nice user interface to work with. Here is what Compiler Explorer looks like:

On the left you input your code, choose the appropriate programming language and on the right you will find the compiler output for that piece of code. You can select which compiler to use, and provide arguments to the compiler. Here is an example of the same piece of code, compiled with the compiler argument -O, which tells the compiler to optimize the code:

Notice how much less code there is once the compiler applied its optimizations! We will use Compiler Explorer whenever we want to understand how the compiler acts on our code.

The dark side of compilers

While compilers are these quasi-magical tools that take our code and convert it into an efficient machine-level representation, there are also downsides to using ahead-of-time compiled languages. Perhaps the largest downside is the time that it takes to compile code. All these transformations and optimizations that a compiler performs can be quite complex. A compiler is nothing more than a program itself, so it is bound by the same rules as any program, namely: Complex processes take time. For sophisticated programming languages, such as Rust or C++, compiling even moderately-sized projects can take minutes, with some of the largest projects taking hours to compile. Compared to interpreted languages such as Python or JavaScript, where code can be run almost instantaneuously, this difference in time for a single "write-code-then-run-it" iteration is what caused the notion of compiled languages being 'less productive' than interpreted languages.

Looking at Rust, fast compilation times are not one of its strong sides, unfortunately. You will find plenty of criticism for Rust due to its sometimes very poor compile times, as well as lots of tips on how to reduce compile times.

At the same time, most of the criticism deals with the speed of "clean builds", that is compiling all code from scratch. Most compilers support what is know as incremental builds, where only those files which have changed since the last compilation are recompiled. In practice, this makes working with ahead-of-time compiled languages quite fast. Nonetheless, it is worth keeping compilation times in mind and, when necessary, adapt your code once things get out of hand.

Recap

In this section, we learned about the difference between compiled languages and interpreted languages. Based on a historical example, the Turing machine, we learned about the concept of abstract machines which can execute code. We saw that for systems programming, it is benefitial to have an abstract machine that matches the underlying hardware closely, to get fine-grained control over the hardware and to achieve good performance. We learned about compilers, which are tools that translate source code from one language into executable code for an abstract machine, and looked at one specific compiler, rustc, for the Rust programming language. Lastly, we learned that the process of compiling code can quite slow due to the various transformations and optimizations that a compiler might apply to source code.

In the next chapter, we will look more closely at the Rust programming language and learn about its major features. Here, we will learn the concept of language paradigms.

2.2. Rust as a multi-paradigm language

In this chapter, we will look more closely at the Rust programming language and its high-level features. We will do some comparisons between Rust code and C++ code, for now purely from a theoretical perspective. In the process, we will learn about programming paradigms, which often define the way one writes code in a programming language.

Why different programming languages?

There are a myriad of different programming languages available today. As of 2021, Wikipedia lists almost 700 unique programming languages, stating that these are only the "notable programming languages". With such an abundance in programming languages that a programmer can choose from, it is only natural that questions such as "What is the best programming language?" arise. Such questions are of course highly subjective, and discussions whether programming language A is superior to programming language B are often fought with almost religious zeal. Perhaps the better question to ask instead is: "Why do so many different programming languages exist?"

🔤 Exercise 2.2.1

List the programming languages that you know of, as well as the ones that you have already written some code in. If you have written code in more than one language, which language did you prefer, and why? Can you come up with some metrics that can be used to assess the quality of a programming language?

💡 Need help? Click here

C++ is often seen as "hard" due to its many language features, few guardrails and a complex standard. At the same time, it is seen as very fast, meaning the programs written with C++ can be high-performance programs. For actual quality criteria that we can deduce from that, read on!

One possible approach to understand why there are so many different programming languages is to view a programming language like any other product that is available on the market. Take shoes as an analogy. There are lots of companies making shoes, and each company typically has many different product lines of shoes available. There are of course different types of shoes for different occasions, such as sneakers, slippers, winter boots or dress shoes. Even within each functional category, there are many different variations which will differ in price, style, material used etc. A way to classify shoes (or any product for that matter) is through quality criteria. Examples of quality criteria are:

- Performance

- Usability

- Reliability

- Look&Feel

- Cost

We can apply these quality criteria to programming languages as well and gain some insight into why there are so many programming languages. We will do so for the three interesting criteria Performance, Usability and Reliability.

Performance as a quality criterion for programming languages

Performance is one of the most interesting quality criteria when applying it to programming languages. There are two major ways to interpret the term performance here: One is the intuitive notion of "How fast are the programs that I can write with this programming language?". We talked a bit about this in section 1.2 when we learned about high-level and low-level programming languages. Another interpretation of performance is "What can I actually do with this programming language?" This question is interesting because there is a theoretical answer and a practical one, and both are equally valuable. The theoretical answers comes from the area of theoretical computer science (a bit obvious, isn't it?). Studying theoretical computer science is part of any decent undergraduate curriculum in computer science, as it deals with the more mathematical and formal aspects of computer science. Many of the underlying concepts that make modern programming languages so powerful are directly rooted in theoretical computer science, as we will see time and again in this course. To answer the question "What can I do with programming language X?", a theoretical computer scientist will ask you what abstract machine your programming language runs on. In the previous chapter, we learned about the Turing machine as an abstract machine, which incidentely is also the most powerful (conventional) abstract machine known to theoretical computer scientists (remember the Church-Turing-Thesis?).

If you can simulate a Turing machine in your programming language, you have shown that your programming language is as powerful (as capable) as any other computational model (disregarding some exotic, theoretical models). We call any such language a Turing-complete language. Most modern programming languages are Turing-complete, which is why you rarely encounter computational problems which you can solve with one popular programming language, but not with another. At least, this is the theoretical aspect of it. From a practical standpoint, there are many capabilities that one language might have that another language is lacking. The ability to directly access hardware resources is one example, as we saw in section 1.2. The whole reason why we chose Rust as a programming language in this course is that it has capabilities that for example the language Python does not have. In practice, many languages have mechanisms to interface with code written in other languages, to get access to certain capabilities that they themselves lack. This is called language interoperability or interop for shortThis might become part of a future chapter of this book. Stay tuned!. In this regard, if language A can interop with language B, they are in principle equivalent in terms of their capabilities (strictly speaking the capabilities of A form a superset of the capabilities of B). In practice, interop might introduce some performance overhead, makes code harder to debug and maintain and thus is not always the best solution. This leads us to the next quality criterion:

Usability of programming languages

Usability is an interesting criterion because it is highly subjective. While there are formal definitions for usability, even those definitions still involve a high degree of subjectiveness. The same is true for programming languages. The Python language has become one of the most popular programming languages over the last decade in part due to its great usability. It is easy to install, easy to learn and easy to write, which is why it is now seen as a great choice for the first programming language that one should learn when learning programming. We can define some aspects that make a language usable:

- Simplicity: How many concepts does the programmer have to memorize in order to effectively use the language?

- Conciseness: How much code does the programmer have to write for certain tasks?

- Familiarity: Does the language use standard terminology and syntax that is also used in other languages?

- Accessibility: How much effort is it to install the necessary tools and run your first program in the language?

You will see that not all of these aspects might have the same weight for everyone. If you start out learning programming, simplicity and accessibility might be the most important criteria for you. As an experienced programmer, you might look more for conciseness and familiarity instead.

🔤 Exercise 2.2.2

How would you rate the following programming languages in terms of simplicity, conciseness, familiarity and accessibility? Python, Java, C, C++, Rust, Haskell. If you don't know some of these languages, try to look up an introductory tutorial for them (you don't have to write any code) and make an educated guess.

💡 Need help? Click here

| Language | Simplicity | Conciseness | Familiarity | Accessibility |

|---|---|---|---|---|

| Python | High (easy to learn) | High (depending on the problem to solve, for algorithms less so than for data processing) | Medium-high (indentation for scoping is unusual, but has many wide-spread keywords) | High (installation is easy, often comes preinstalled on Linux systems, lots of resources for learning out there) |

| Java | Medium-high (object-oriented nature can feel cumbersome, but there is little arcane syntax) | Low (Java code is famous for being very verbose) | High (the syntax is similar enough to C/C++ in many aspects, almost identical to C#, and not too far removed from JavaScript, covering a wide range of popular languages) | High ("Java runs on over X billion devices" was a popular marketing slogan) |

| C | Medium (pointers can be hard to learn, but the language itself is small in terms of features) | Medium (not as much boilerplate as Java, but also not many features for expressing things in a more dense way) | Medium (perhaps the most used language historically, but if you come from Web development for example, C is very different) | Medium (the main selling point of C is that everything - as in every platform - supports C. The tooling is lacking however) |

| C++ | Low (C++ is famous for being overly complex and tripping even experts with arcane language details. Its growth over multiple decades has made the language bloated) | Low (the stronger type system compared to C means more typing for the same things) | Medium (the basic syntax is easy enough, templates complicate things) | Low (mainly due to the way C++ projects are structured, often requiring complex build systems that are hard to learn) |

| Rust | Low (hard learning curve due to the very strict type system) | Medium (arguably better than C++ due to more high-level abstractions and type inference, but still requires more typing to express certain things compared to e.g. Python) | Low (there are certain syntactic elements that are unusual for many programmers, such as match and lifetime annotations, but mostly the concepts of borrow checking and lifetimes themselves are unfamiliar to most programmers) | High (easy to install, some of the best tooling out there, outstanding documentation at least for the core parts of the language) |

| Haskell | Low (perhaps the most complicated language on the list, if only due to its close relation to mathematics) | Very high (a side-effect of being a functional language, often reading similar to mathematical statements) | Low (very unusual syntax for most programmers not used to functional programming) | Medium (simple enough to install and has decent tooling, but it is a niche programming language and as such resources are scarcer than for other languages) |

In the opinion of the author, Rust has an overall higher usability than most other systems programming languages due to its great tooling and documentation, which is the main reason why it was chosen for this course.

Reliability of programming languages

Reliability is a difficult criterion to assess in the context of programming languages. A programming language does not wear out with repeated use, as any physical product might. Instead, we might ask the question "How reliable are the programs that I write with this language?" This all boils down to "How easy is it to accidently introduce bugs to the code?", which is an exceedingly difficult question to answer. Bugs can take on a variety of forms and can have a myriad of origins. No programming language can reasonably expect to prevent all sorts of bugs, so we have to instead figure out what bugs can be prevented by using a specific programming language, and how the language deals with any errors that can't be prevented.

Generally, it makes sense to distinguish between logical errors caused by the programmer (directly or indirectly), and runtime errors, which can occur due to external circumstances. Examples of logical errors are:

- The usage of uninitialized variables

- Not enforcing assumptions (e.g. calling a function with a null-pointer without checking for the possibility of null-pointers)

- Accessing an array out-of-bounds (for example due to the famous off-by-one errors)

- Wrong calculations

Examples of runtime errors are:

- Trying to access a non-existing file (or insufficient privileges to access the file)

- A dropped network connection

- Insufficient working memory

- Wrong user input

We can now classify languages by the mechanisms they provide to deal with logical and runtime errors. For runtime errors, a reliable language will have robust mechanisms to deal with a large range of possible errors and will make it easy to deal with these errors. We will learn more about this in chapter 5 when we talk about error handling. Preventing logical errors is a lot harder, but there are also many different mechanisms which can help and make a language more reliable. In the next section for example, we will learn how the Rust type system makes certain kinds of logical errors impossible to write in Rust.

A last important concept, which plays a large role in systems programming, is that of determinism. One part of being reliable as a system is that the system gives expected behaviour repeatedly. Intuitively, one might think that every program should behave in this way, giving the same results when executed multiple times with the same parameters. While this is true in principle, in practice not all parameters of a program can be specified by the user. On a modern multi-processor system, the operating system for example determines at which point in time your program is executed on which logical core. Here, it might compete with other programs that are currently running on your system in a chaotic, unpredictable manner. Even disregarding the operating system, a programming language might contain unpredictable parts, such as a garbage collector which periodically (but unpredictably) interrupts the program to free up unused but reserved memory. Determinism is especially important in time-critical systems (so-called real-time systems), where code has to execute in a predictable timespan. This ranges from soft real-time systems, such as video games which have to hit a certain target framerate in order to provide good user experience, to hard real-time systems, such as the circuit that triggers the airbag in your car in case of an emergency.

It is worth pointing out that, in principle, all of the given examples still constitute deterministic behaviour, however the amount of information required to make a useful prediction in those systems is so large that any such prediction is in practice not feasible. Many programs thus constitute chaotic systems: Systems that are in principle deterministic, but are so sensitive to even a small change in input conditions that their behaviour cannot be accurately predicted. Luckily, most software still behaves deterministically on the macroscopic scale, even if it exhibits chaotic behaviour on the microscopic scale.

The concept of programming paradigms

In order to obtain good performance, usability or reliability, there are certain patterns in programming language design that can be used. Since programming languages are abstractions over computation, a natural question that arises is: "What are good abstractions for computation?" Here, over the last decades, several patterns have emerged that turned out to be immensely useful for writing fast, efficient, concise and powerful code. These patterns are called programming paradigms and can be used to classify the features of a programming language. Perhaps one of the most well-known paradigms is object-oriented programming. It refers to a way of structuring data and functions together in functional units called objects. While object-oriented programming is often marketed as a "natural" way of writing code, by modeling it as you would model relationships between entities in the real world, it is far from the only programming paradigm. In the next couple of sections, we will look at the most important programming paradigms in use today.

The most important programming paradigms

In the context of systems programming, there are several programming paradigms which are especially important. These are: Imperative programming, object-oriented programming, functional programming, generic programming, and concurrent programming. Of course, there are many other programming paradigms in use today, for a comprehensive survey the paper "Programming Paradigms for Dummies: What Every Programmer Should Know" by Peter Van Roy {{#cite Roy09}} is a good starting point.

Imperative

Imperative programming refers to a way of programming in which statements modify state and express control flow. Here is a small example written in Rust:

#![allow(unused)] fn main() { fn collatz(mut n: u32) { loop { if n % 2 == 0 { n /= 2; } else { n = 3 * n + 1; } } } }

This code computes the famous Collatz sequence and illustrates the key concepts of imperative programming. It defines some state (the variable n) that is modified using statements (conditionals, such as if and else, and assignments through =). The statements define the control flow of the program, which can be thought of as the sequence of instructions that are executed when your program runs. In this regard, imperative programming is a way of defining how a program should achieve its desired result.

Imperative programming might feel very natural to many programmers, especially when starting out to learn programming. It is the classic "Do this, then that" way of telling a computer how to behave. Indeed, most modern hardware architectures are imperative in nature, as the low-level machine instructions are run one after another, each acting on and potentially modifying some state. As this style of programming closely resembles the way that processors execute code, it has become a natural choice for writing systems software. Most systems programming languages that are in use today thus use the imperative programming paradigm to some extent.

The opposite of imperative programming is called declarative programming. If imperative programming focuses on how things are to be achieved, declarative programming focuses on what should be achieved. To illustrate the declarative programming style, it pays of to take a look at mathematical statements, which are inherently declarative in nature:

f(x)=x²

This simple statement expresses the idea that "There is some function f(x) whose value is x²". It describes what things are, not how they are achieved. The imperative equivalent of this statement might be something like this:

#![allow(unused)] fn main() { fn f_x(x: u32) -> u32 { x * x } }

Here, we describe how we achieve the desired result (f(x) is achieved by multiplying x by itself). While this difference might seem pedantic at first, it has large implications for the way we write our programs. One specific form of declarative programming is called functional programming, which we will introduce in just a bit.

Object-Oriented

The next important programming paradigm is the famous object-oriented programming (OOP). The basic idea of object-oriented programming is to combine state and functions into functional units called objects. OOP builds on the concept of information hiding, where the inner workings of an object are hidden to its users. Here is a short example of object-oriented code, this time written in C++:

#include <iostream>

#include <string>

class Cat {

std::string _name;

bool _is_angry;

public:

Cat(std::string name, bool is_angry) : _name(std::move(name)), _is_angry(is_angry) {}

void pet() const {

std::cout << "Petting the cat " << _name << std::endl;

if(_is_angry) {

std::cout << "*hiss* How dare you touch me?" << std::endl;

} else {

std::cout << "*purr* This is... acceptable." << std::endl;

}

}

};

int main() {

Cat cat1{"Milo", false};

Cat cat2{"Jack", true};

cat1.pet();

cat2.pet();

}

In OOP, we hide internal state in objects and only interact with them through a well-defined set of functions on the object. The technical term for this is encapsulation, which is an important idea to keep larger code bases from becoming confusing and hard to maintain. Besides encapsulation, OOP introduces two more concepts that are important: Inheritance, and Polymorphism.

Inheritance refers to a way of sharing functionality and state between multiple objects. By inheriting from an objectTechnically, classes inherit from other classes, but it does not really matter here., another object gains access to the state and functionality of the base object, without having to redefine these state and functionalities. Inheritance thus aims to reduce code duplication.

Polymorphism goes a step further and allows objects to serve as templates for specific behaviour. This is perhaps the most well-known example of object-oriented code, where common state or behaviour of a class of entities is lifted into a common base type. The base type defines what can be done with these objects, each specific type of object then defines how this action is done. We will learn more about different types of polymorphism in section 2.5, for now a single example will suffice:

#include <iostream>

#include <memory>

struct Shape {

virtual ~Shape() {};

virtual double area() const = 0;

};

class Circle : Shape {

double radius;

public:

explicit Circle(double radius) : radius(radius) {}

double area() const override {

return 3.14159 * radius * radius;

}

};

class Square : Shape {

double sidelength;

public:

explicit Square(double sidelength) : sidelength(sidelength) {}

double area() const override {

return sidelength * sidelength;

}

};

int main() {

auto shape1 = std::make_unique<Circle>(10.0);

auto shape2 = std::make_unique<Square>(5.0);

std::cout << "Area of shape1: " << shape1->area() << std::endl;

std::cout << "Area of shape2: " << shape2->area() << std::endl;

}

OOP became quite popular in the 1980s and 1990s and still to this day is one of the most widely adopted programming paradigms. It has arguably more importance in applications programming than in systems programming (many systems software is written in C, a non-object-oriented language), but its overall importance and impact to programming as a whole make it worth knowing. In particular, the core concepts of OOP (encapsulation, inheritance, polymorphism) can be found within other programming paradigms as well, albeit in different flavours. In particular, Rust is not considered to use the OOP paradigm, but it still supports encapsulation and polymorphism, as we will see in later chapters.

It is worth noting that the popularity of OOP is perhaps more due to its history than due to its practical usefulness today. OOP has been heavily criticised time and again, and modern programming languages increasingly tend to move away from it. This is in large part due to the downsides of OOP: The immense success of OOP after its introduction for a time led many programmers to use it as a one-size-fits-all solution, designing large class hierarchies that quickly become unmaintainable. Perhaps most importantly, OOP does not map well onto modern hardware architectures. Advances in computational power over the last decade were mostly due to increased support for concurrent computing, not so much due to an increase in sequential execution speed. To make good use of modern multi-core processors, programming languages require solid support for concurrent programming. OOP is notoriously bad at this, as the information-hiding principle employed by typical OOP code does not lend itself well to parallelization of computations. This is where concepts such as functional programming and specifically concurrent programming come into play.

Functional

We already briefly looked at declarative programming, which is the umbrella term for all programming paradigms that focus on what things are, instead of how things are done. Functional programming (FP) is one of the most important programming paradigms from the latter domain. With roots deep within mathematics and theoretical computer science, it has seen an increase in popularity over the last decade due to its elegance, efficiency and usefulness for writing concurrent code.

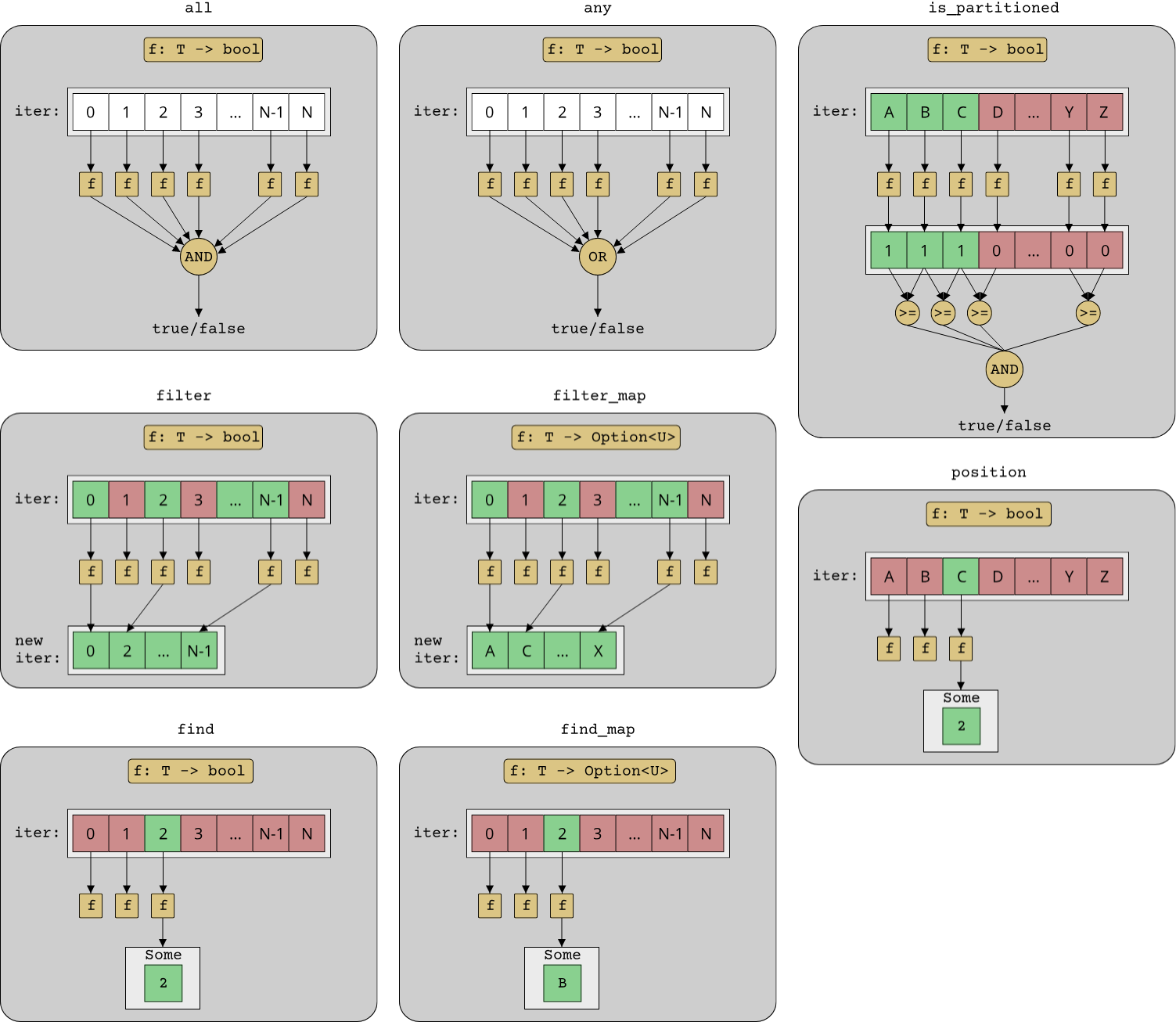

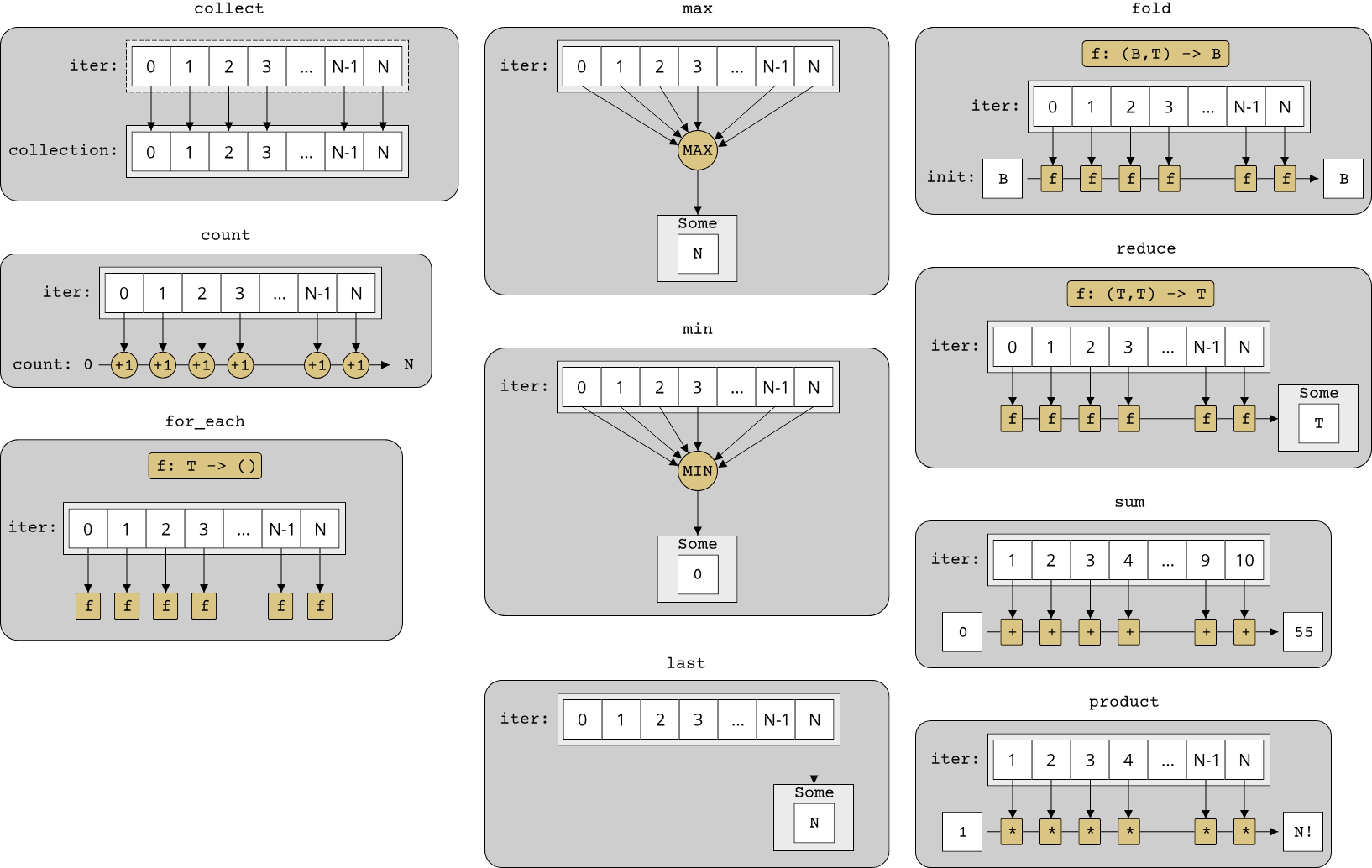

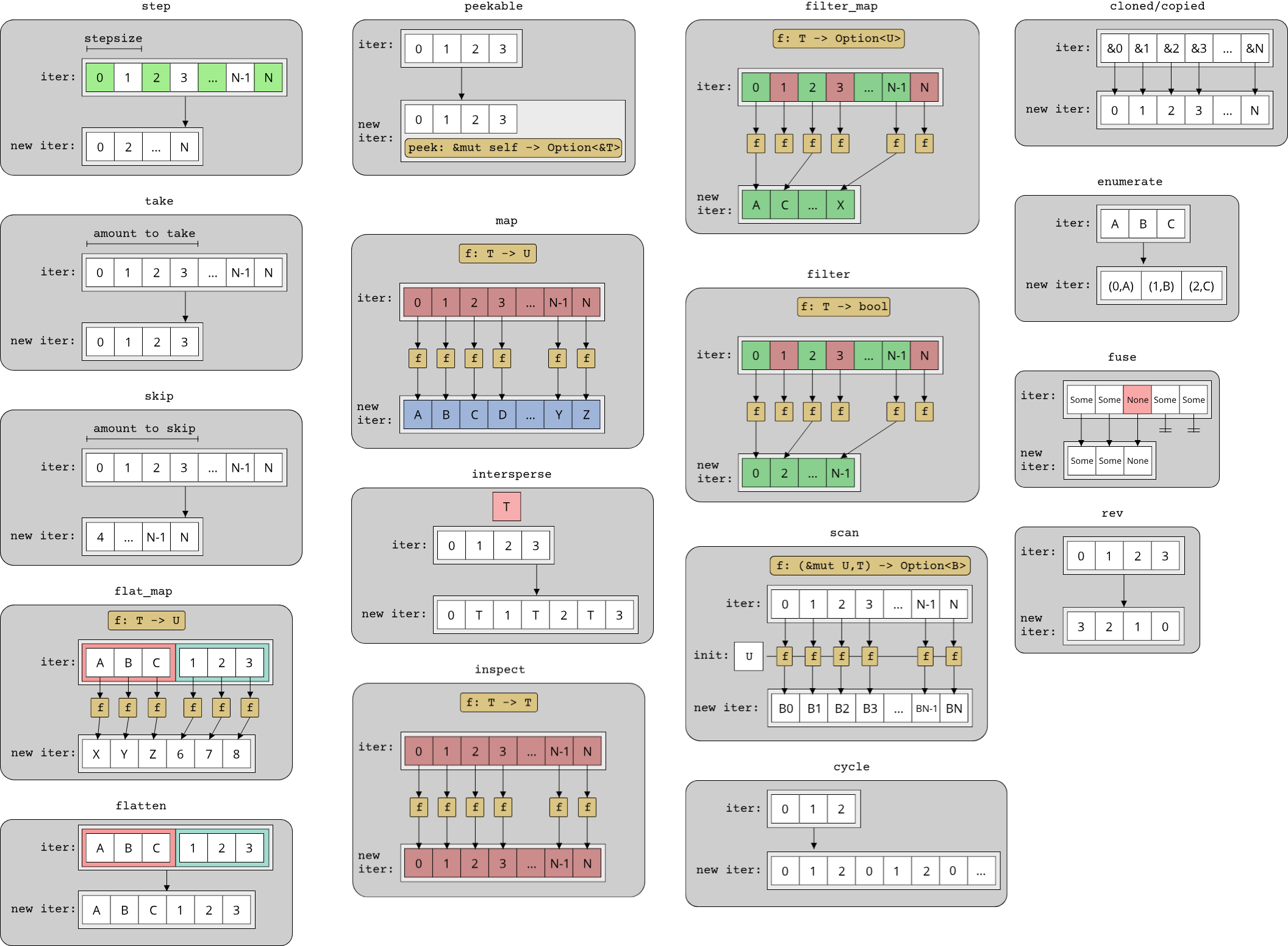

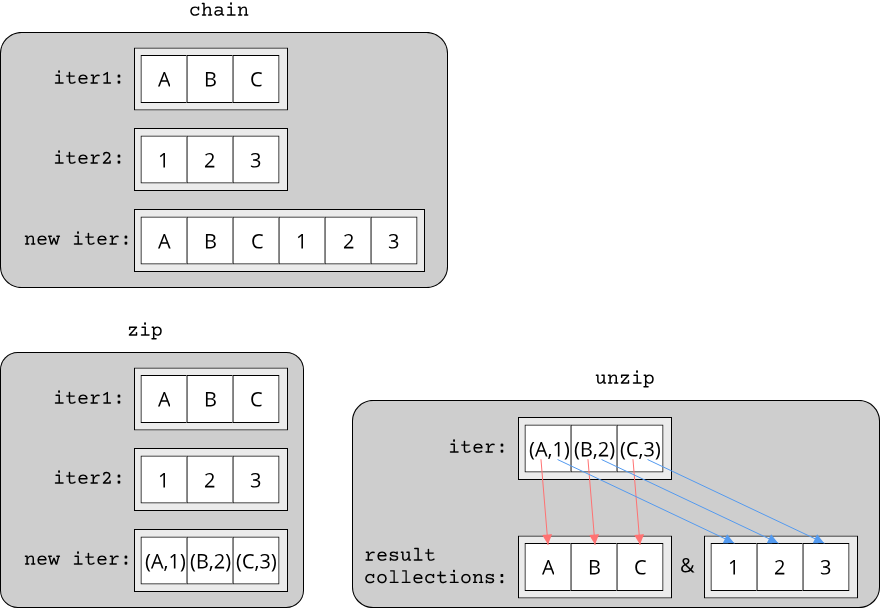

FP generally refers to programs which are written through the application and composition of functions. Functions are of course a common concept in most programming languages, what makes functional programming stand out is that it treats functions as "first-class citizens". This means that functions share the same characteristics as data, namely that they can be passed around as arguments, assigned to variables and stored in collections. A function that takes another function as an argument is called a higher-order function, a concept which is crucial to the success of the FP paradigm. Many of the most common operations in programming can be elegantly solved through the usage of higher-order functions. In particular, all algorithms that use some form of iteration over elements in a container are good candidates: Sorting a collection, searching for an element in a collection, filtering elements from a collection, transforming elements from one type into another type etc. The following example illustrates the application of functional programming in Rust:

#![allow(unused)] fn main() { struct Student { pub id: String, pub gpa: f64, pub courses: Vec<String>, } fn which_courses_are_easy(students: &[Student]) -> HashSet<String> { students .iter() .filter(|student| student.gpa >= 3.0) .flat_map(|student| student.courses.clone()) .collect() } }

Here, we have a collection of Students and want to figure out which courses might be easy. The naive way to do this is to look at the best students (all those with a GPA >= 3) and collect all the courses that these students took. In functional programming, these operations - finding elements in a collection, converting elements from one type to another etc. - are higher-order functions with specific names that make them read almost like an english sentence: "Iterate over all students, filter for those with a GPA >= 3 and map (convert) them to the list of courses. Then collect everything at the end." Notice that the filter and flat_map functions (which is a special variant of map that collapses collections of collections into a single level) take another function as their argument. In this way, these functions are composable and general-purpose. Changing the search criterion in the filter call is equal to passing a different function to filter:

#![allow(unused)] fn main() { filter(|student| student.id.starts_with("700")) }

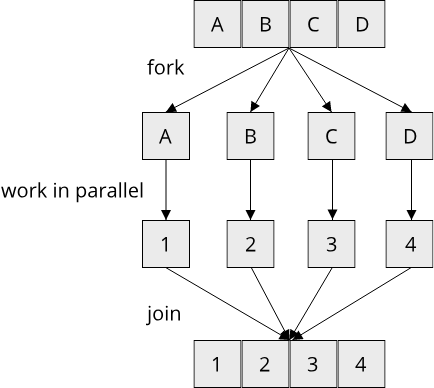

All this can of course be achieved with the imperative way of programming as well: Create a counter that loops from zero to the number of elements in the collection minus one, access the element in the collection at the current index, check the first condition (GPA >= 3) with an if-statement, continue-ing if the condition is not met etc. While there are many arguments for functional programming, such as that it produces prettier code that is easier to understand and maintain, there is one argument that is especially important in the context of systems programming. Functional programming, by its nature, makes it easy to write concurrent code (i.e. code that can be run in parallel on multiple processor cores). In Rust, using a library called rayon, we can run the same code as before in parallel by adding just 4 characters:

#![allow(unused)] fn main() { fn which_courses_are_easy(students: &[Student]) -> HashSet<String> { students .par_iter() .filter(|student| student.gpa >= 3.0) .flat_map(|student| student.courses.clone()) .collect() } }

We will learn a lot more about writing concurrent code in Rust in chapter 7, for now it is sufficient to note that functional programming is one of the core programming paradigms which make writing concurrent code easy.

As a closing note to this section, there are also languages that are purely functional, such as Haskell. Functions in a purely functional programming language must not have side-effects, that is they must not modify any global state. Any function for which this condition holds is called a pure function (hence the name purely function language). Pure functions are an important concept that we will examine more closely when we will learn about concurrency in systems programming.

Generic

Another important programming paradigm is called generic programming. In generic programming, algorithms and datastructures can be implemented without knowing the specific types that they operate on. Generic programming thus is immensely helpful in preventing code duplication. Code can be written once in a generic way, which is then specialized (instantiated) using specific types. The following Rust-Code illustrates the concept of a generic container class:

use std::fmt::{Display, Formatter}; struct PrettyContainer<T: Display> { val: T, } impl<T: Display> PrettyContainer<T> { pub fn new(val: T) -> Self { Self { val } } } impl<T: Display> Display for PrettyContainer<T> { fn fmt(&self, f: &mut Formatter<'_>) -> std::fmt::Result { write!(f, "~~~{}~~~", self.val) } } fn main() { // Type annotations are here for clarity let container1 : PrettyContainer<i32> = PrettyContainer::new(42); let container2 : PrettyContainer<&str> = PrettyContainer::new("hello"); println!("{}", container1); println!("{}", container2); }

Here, we create a container called PrettyContainer which is generic over some arbitrary type T. The purpose of this container is to wrap arbitrary values and print them in a pretty way. To make this work, we constrain our generic type T, requiring it to have the necessary functionality for being displayed (e.g. written to the standard output).

Generic programming enables us to write the necessary code just once and then use our container with various different types, such as i32 and &str in this example. Generic programming is closely related to the concept of polymorphism that we learned about in the section on object-oriented programming. We will cover generics in the context of type systems more closely in the next two chapters.

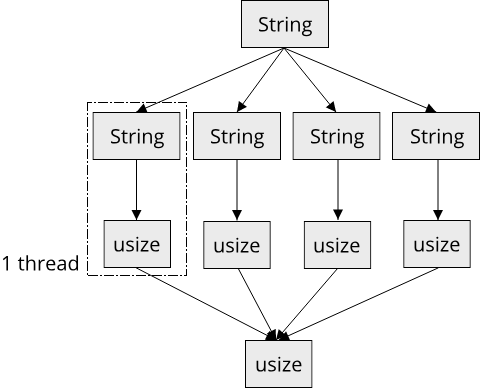

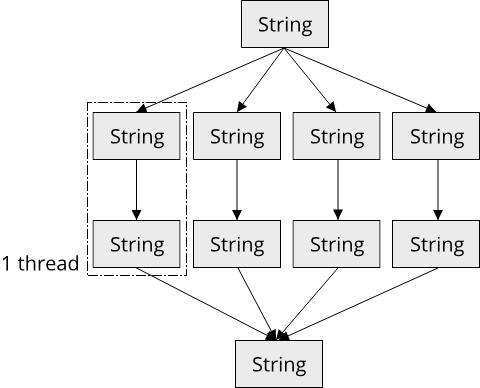

Concurrent

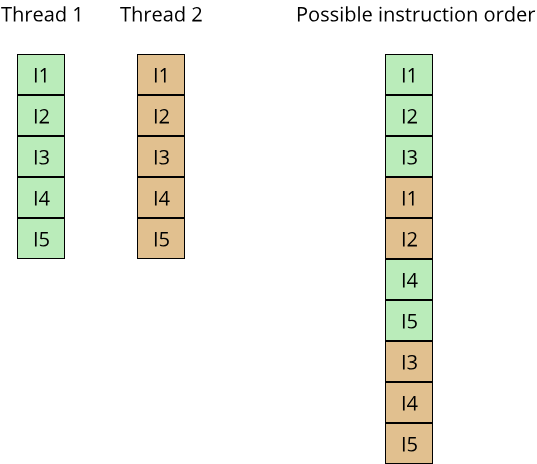

The last important programming paradigm that we will look at is concurrent programming. We already saw an example for writing code that makes use of multiple processor cores in the section on functional programming. Concurrent programming goes a step further and includes various mechanisms for writing concurrent code. At this point, we first have to understand an important distinction between two terms: Concurrency and Parallelism. Concurrency refers to multiple processes running during the same time periods, whereas parallelism refers to multiple processes running at the same time. An example of concurrency in real-life is university. Over the course of a semester (the time period), one student will typical be enrolled in multiple courses, making progress on all of them (ideally finishing them by the end of the semester). At no point in time, however, did the student sit in two lectures at the same timeWith online courses during the pandemic, things might be different though. Time-turners might also be a way to go.. An example of parallelism is during studying. A student can study for an exam while, at the same time, listening to some music. These two processes (studying and music) run at the same time, thus they are parallel.

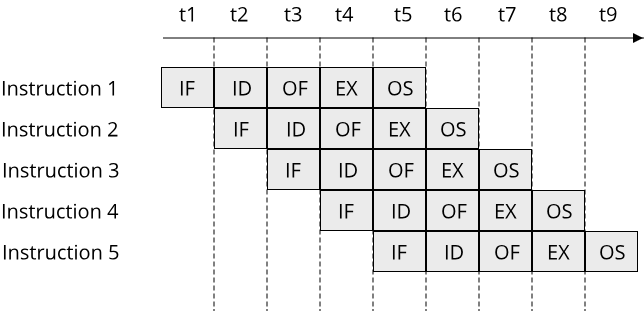

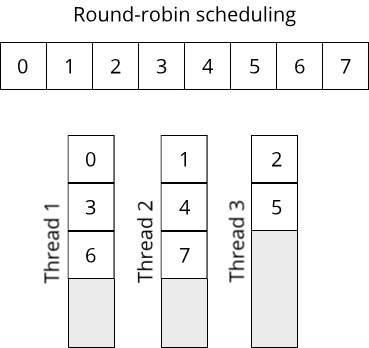

Concurrency thus can be seen as a weaker, more general form of parallelism. An interesting piece of history is that concurrency was employed in operating systems way before multi-core processors became commonplace. This allowed users to run multiple pieces of software seemingly at the same time, all one just one processor. The illusion of parallelism was achieved through a process called time slicing, where each program ran exclusively on the single processor core for only a few milliseconds before being replaced by the next program. This rapid switching between programs gave the illusion that multiple things were happening at the same time.

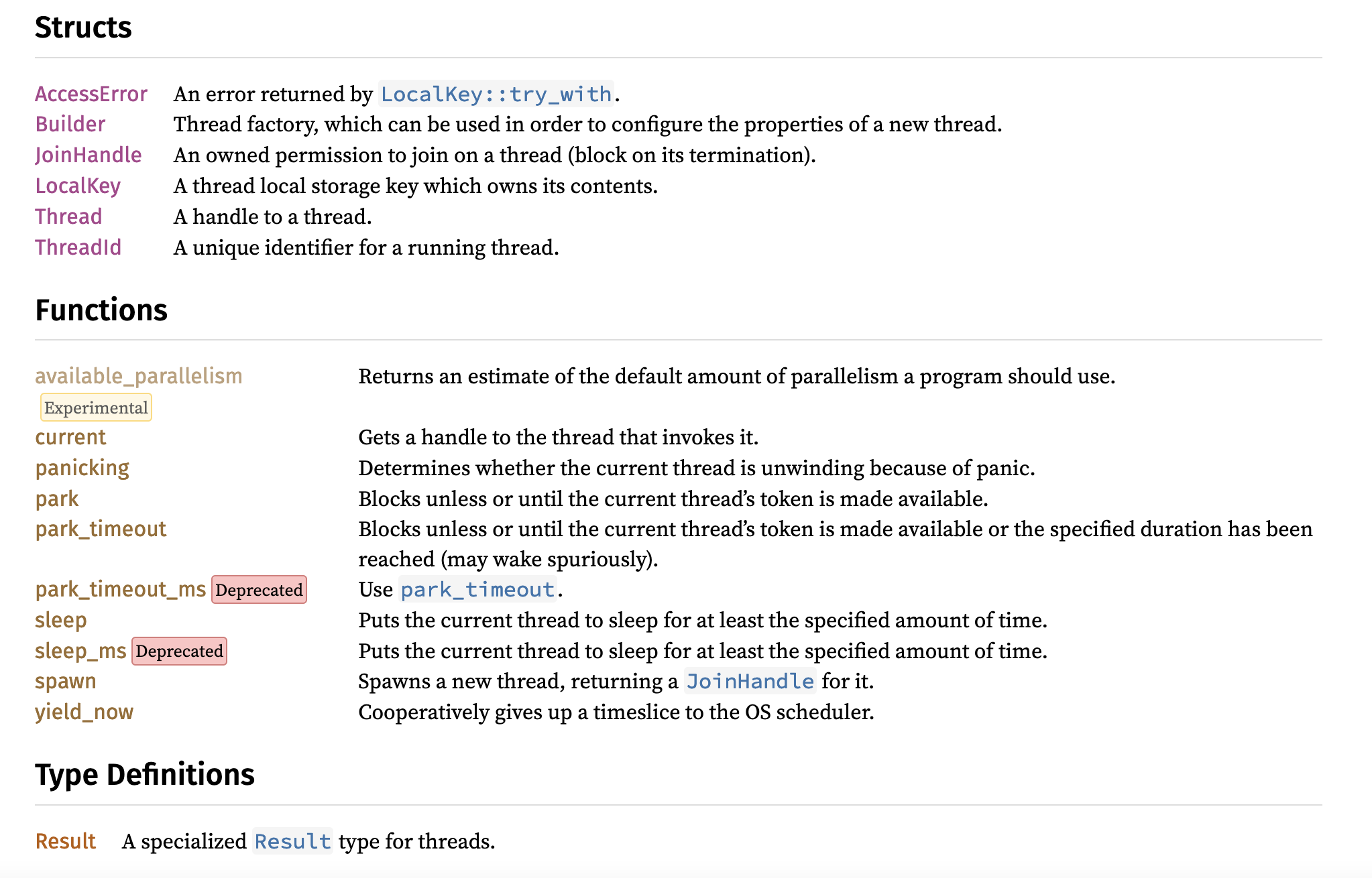

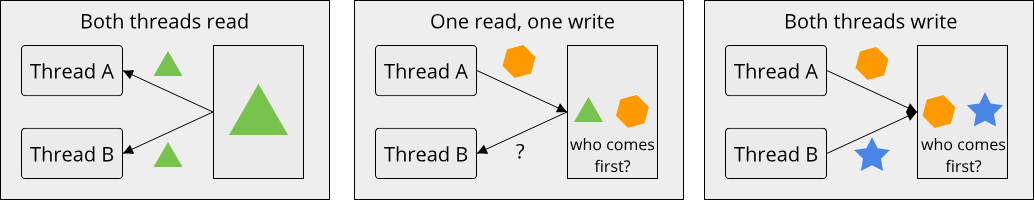

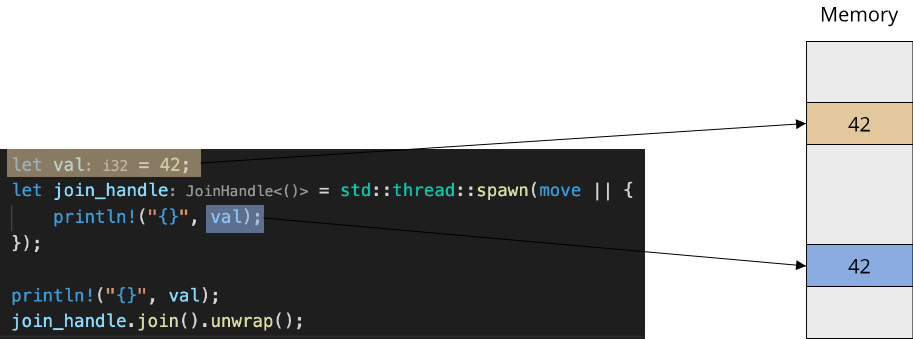

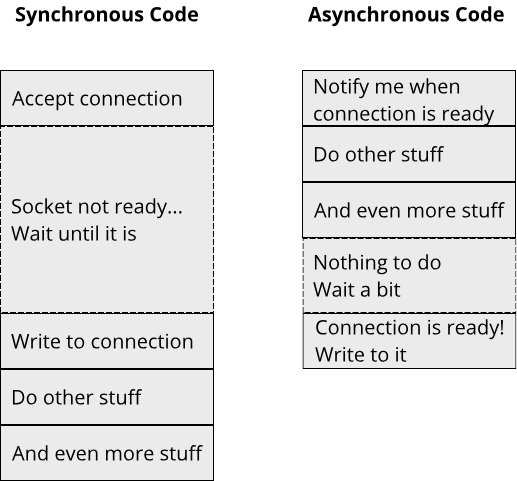

Concurrency is a very important programming paradigm nowadays because it is central for achieving good performance and interactivity in complex applications. At the same time, concurrency means that multiple operations can be in flight at the same time, resulting in asynchronous execution and often non-deterministic behaviour. This makes writing concurrent code generally more difficult than writing sequential code (for example with imperative programming). Especially when employing parallelism, where multiple things can happen at the same instant in time, a whole new class of programming errors becomes possible due to multiple operations interfering with each other. To reduce the mental load of progammers when writing concurrent or parallel code, and to prevent some of these programming errors, concurrent programming employs many powerful abstractions. We will learn more about these abstractions when we talk about fearless concurrency in chapter 7. For now, here is a short example form the Rust documentation that illustrates a simple form of concurrency using the thread abstraction:

use std::thread; use std::time::Duration; fn main() { thread::spawn(|| { for i in 1..10 { println!("hi number {} from the spawned thread!", i); thread::sleep(Duration::from_millis(1)); } }); for i in 1..5 { println!("hi number {} from the main thread!", i); thread::sleep(Duration::from_millis(1)); } }

Running this example multiple times illustrates the non-deterministic nature of concurrent code, with each run of the program potentially producing a different order of the print statements.

The programming paradigms used by Rust

Now that we know some of the most important programming paradigms and their importance for systems programming, we can take a quick look at the Rust programming language again. As you might have guessed while reading the previous sections, many programming languages use multiple programming paradigms at the same time. These languages are called multi-paradigm languages. This includes languages such as C++, Java, Python, and also Rust. Here is a list of the main programming paradigms that Rust uses:

- Imperative

- Functional

- Generic

- Concurrent

Additionally, Rust is not an object-oriented programming language. This makes it stand out somewhat from most of the other languages that are usually taught in undergraduate courses at universities, with the exception of C, which is also not object-oriented. Comparing C to Rust is an interesting comparison in the context of systems programming, because C is one of the most widely used systems programming languages, even though from a modern point of view, it is lacking many convenience features that one might be used to from other languages. There is plenty of discussion regarding the necessity of "fancy" programming features, and some people will argue that one can write perfectly fine systems code in C (as the Linux kernel demonstrates). While this is certainly true (people also used to write working programs in assembly language for a long time), a modern systems programming language such as Rust might be more appealing to a wider range of developers, from students just starting to learn programming, to experienced programmers who have been scared by unreadable C++-template-code in the past.

Feature comparison between Rust, C++, Java, Python etc.

We shall conclude this section with a small feature comparison of Rust and a bunch of other popular programming languages in use today:

| Language | Imperative | Object-oriented | Functional | Generic | Concurrent | Other notable features |

|---|---|---|---|---|---|---|

| Rust | Yes | No | Yes (impure) | Yes (type-classes) | Yes (threads, async) | Memory-safety through ownership semantics |

| C++ | Yes | Yes (class-based) | Yes (impure) | Yes (templates) | Yes (threads since C++11, coroutines since C++20) | Metaprogramming through templates |

| C | Yes | No | No | No | No (but possible through OS-dependent APIs, such as pthreads) | Metaprogramming through preprocessor |

| Java | Yes | Yes (class-based) | Yes (impure) | Yes (type-erasure) | Yes (threads) | Supports runtime reflection |

| Python | Yes | Yes (class-based) | Yes (impure) | No (dynamically typed) | Yes (threads, coroutines) | Dynamically typed scripting language |

| JavaScript | Yes | Yes (prototype-based) | Yes (impure) | No (dynamically typed) | Yes (async) | Uses event-driven programming |

| C# | Yes | Yes (class-based) | Yes (impure) | Yes (type substitution at runtime) | Yes (threads, coroutines) | One of the most paradigm-rich programming languages in use today |

| Haskell | No | No | Yes (pure) | Yes (type-classes) | Yes (various methods available) | A very powerful type system |

It is worth noting that most languages undergo a constant process of evolution and development themselves. C++ has seen significant change over the last decade, starting a three-year release cycle with C++11 in 2011, with the current C++ version depicted here. Rust has a much faster release cycle of just six weeks, new versions and their changelogs can be found here.

Recap

In this chapter, we learned about programming paradigms. We saw how certain patterns for designing a programming language can aid in writing faster, more robust, more maintainable code. We learned about five important programming paradigms: Imperative programming, object-oriented programming, functional programming, generic programming and concurrent programming. We saw some examples of Rust and C++ code for these paradigms and learned that Rust supports most of these paradigms, with the exception of object-oriented programming. We concluded with a feature comparison of several popular programming languages.

In the next chapter, we will dive deeper into Rust and take a look at the type system of Rust. Here, we will learn why strongly typed languages are often preferred in systems programming.

Questions and exercises

❓ Question

Why do all of the languages introduced in this chapter use multiple programming paradigms instead of a single one?

💡 Click to show the answer

Each programming paradigm has something unique to offer in terms of usability, performance, robustness etc. It is possible to support more than one paradigm within a single language, so we can make languages more expressive by doing so. Adding too many paradigms can also result in a language that is harder to understand, as there are too many different ways of doing the same thing.

❓ Question

Why do almost all modern programming languages use the concurrent programming paradigm?

💡 Click to show the answer

Since Moore's Law doesn't hold anymore, doing things concurrently has been one of the major ways for improving program performance over the last two decades. Without a way to access concurrency on our machines, a language will thus be very limited when it comes to writing fast code.

❓ Question

What is the main benefit of generic programming? If you ever wrote C code, how did you handle data structures working with multiple different types?

💡 Click to show the answer

Generic code prevents code duplication. Most generic programming approaches also maintain type-safety. This is less error-prone and generally simpler to reason about than methods for code reuse such as the C/C++ preprocessor, which operates purely textual.

🔤 Exercise 2.2.3

Write a small program that sorts a large number of integers (e.g. 1 million) in both C/C++ and Python. Measure the runtime of both programs. What do you observe?

💡 Need help? Click here

Hint: You can measure whole-program execution time on the command line using e.g. time on Unix-systems: time my_program. Many languages also provide ways of measuring time with high-precision from within the source code, e.g. through std::chrono::high_resolution_clock in C++ or time.perf_counter() in Python.

🔤 Exercise 2.2.4

Write a C++ program that implements the same algorithm in three different styles: Imperative, object-oriented, and functional. Calculating fibonacci numbers or finding even numbers in an array are good candidates. What do you observe?

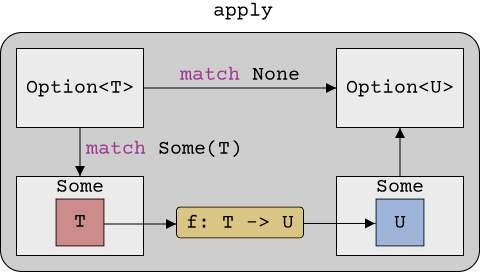

💡 Need help? Click here